Guide: Setting up JSON validation


This guide walks you through the points to consider when setting up JSON validation and the steps to bring your validation service online.

What you will achieve

At the end of this guide you will have understood what you need to consider when starting to implement validation services for your JSON-based specification. You will also have gone through the steps to bring it online and make it available to your users.

A JSON validation service can be created using multiple approaches depending on your needs. You can have an on-premise (or local to your workstation) service through Docker or use the Test Bed’s resources and, with minimal configuration, bring online a public service that is automatically kept up-to-date.

For the purpose of this guide you will be presented with the options to consider and start with a Docker-based instance that could be replaced (or complemented) by a setup through the Test Bed. Interestingly, the configuration relevant to the validator is the same regardless of the approach you choose to follow.

What you will need

About 30 minutes.

A text editor.

A web browser.

Access to the Internet.

Docker installed on your machine (only if you want to run the validator as a Docker container).

A basic understanding of JSON and JSON schema. A good source for more information here is the Understanding JSON schema tutorial site.

How to complete this guide

The steps described in this guide are for the most part hands-on, resulting in you creating a fully operational validation service. For these practical steps there are no prerequisites and the content for all files to be created is provided in each step. In addition, if you choose to try your setup as a Docker container you will also be issuing commands on a command line interface (all commands are provided and explained as you proceed).

Steps

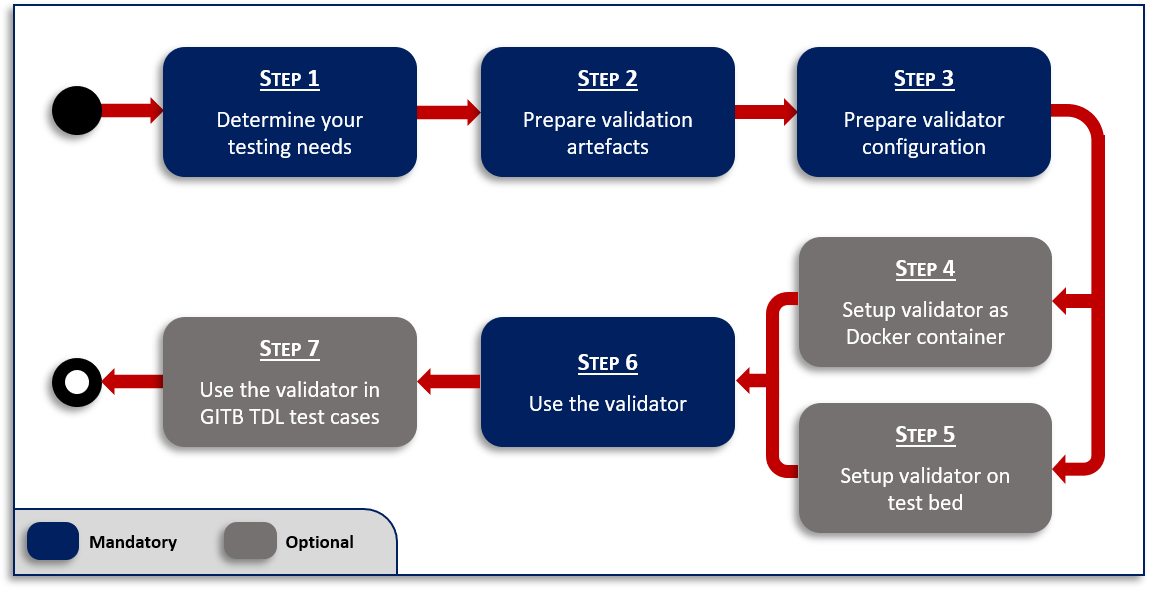

You can complete this guide by following the steps described in this section. Not all steps are required, with certain ones being optional or complementary depending on your needs. The following diagram presents an overview of all steps highlighting the ones that apply in all cases (marked as mandatory):

When and why you should skip or consider certain steps depends on your testing needs. Each step’s description covers the options you should consider and the next step(s) to follow depending on your choice.

Step 1: Determine your testing needs

Before proceeding to set up your validator you need to clearly determine your testing needs. A first outline of the approach to follow would be provided by answering the following questions:

Will the validator be available to your users as a tool to be used on an ad-hoc basis?

Do you plan on measuring the conformance of your community’s members to the JSON-based specification?

Is the validator expected to be used in a larger conformance testing context (e.g. during testing of a message exchange protocol)?

Should the validator be publicly accessible?

Should test data and validation reports be treated as confidential?

The first choice to make is on the type of solution that will be used to power your validation service:

Standalone validator: A service allowing validation of JSON content based on a predefined configuration of JSON schemas. The service supports fine-grained customisation and configuration of different validation types (e.g. specification versions) and supported communication channels. Importantly, use of the validator is anonymous and it is fully stateless in that none of the test data or validation reports are maintained once validation completes.

Complete Test Bed: The Test Bed is used to realise a full conformance testing campaign. It supports the definition of test scenarios as test cases, organised in test suites that are linked to specifications. Access is account-based allowing users to claim conformance to specifications and execute in a self-service manner their defined test cases. All results are recorded to allow detailed reporting, monitoring and eventually certification. Test cases can address JSON validation but are not limited to that, allowing validation of any complex exchange of information.

It is important to note that these two approaches are by no means exclusive. It is often the case that a standalone validator is defined as a first step that is subsequently used from within test cases in the Test Bed. The former solution offers a community tool to facilitate work towards compliance supporting ad-hoc data validation, whereas the latter allows for rigorous conformance testing to take place where proof of conformance is required. This could apply in cases where conformance is a qualification criterion before receiving funding or before being accepted as a partner in a distributed system. Finally, it is interesting to consider that non-trivial JSON validation may involve multiple validation artefacts (e.g. different schemas for different message types). In such a case, even if ad-hoc data validation is not needed, defining a separate validator simplifies management of the validation artefacts by consolidating them in a single location, as opposed to bundling them within test suites.

Regardless of the choice of solution, the next point to consider will be the type of access. If public access is important then the obvious choice is to allow access over the Internet. An alternative would be an installation that allows access only through a restricted network, be it an organisation’s internal network or a virtual private network accessible only by your community’s members. Finally, an extreme case would be access limited to individual workstations where each community member would be expected to run the service locally (albeit of course without the expectation to test message exchanges with remote parties).

If access to your validation services over the Internet is preferred or at least acceptable, the simplest case is to opt for using the shared DIGIT Test Bed resources, both regarding the standalone validator and the Test Bed itself. If such access is not acceptable or is technically not possible (e.g. access to private resources is needed), the proposed approach would be to go for a Docker-based on-premise installation of all components.

Summarising the options laid out in this section, you will first want to choose:

Whether you will be needing a standalone validator, a complete Test Bed or both.

Whether the validator and/or Test Bed will be accessible over the Internet or not.

Your choices here can help you better navigate the remaining steps of this guide. Specifically:

Step 2: Prepare validation artefacts and Step 3: Prepare validator configuration can be skipped if you just want a quick deployment for testing with a generic validator that allows you to upload your own schemas before validating.

Step 4: Setup validator as Docker container can be skipped if you are interested only in a public service or if you plan to only use the validator as part of conformance testing scenarios (i.e. within the Test Bed).

Step 5: Setup validator on Test Bed can be skipped if a publicly accessible service is not an option for you.

Step 7: Use the validator in GITB TDL test cases can be skipped if you only want data validation without additional conformance testing scenarios.

Step 2: Prepare validation artefacts

As an example case for JSON validation we will consider a variation of the EU purchase order case first seen in Guide: Creating a test suite. In short, for the purposes of this guide you are considered to be leading an EU cross-border initiative to define a new common specification for the exchange of purchase orders between retailers.

To specify the content of purchase orders your experts have created the following JSON schema:

{

"$id": "http://itb.ec.europa.eu/sample/PurchaseOrder.schema.json",

"$schema": "http://json-schema.org/draft-07/schema#",

"description": "A JSON representation of EU Purchase Orders",

"type": "object",

"required": [ "shipTo", "billTo", "orderDate", "items" ],

"properties": {

"orderDate": { "type": "string" },

"shipTo": { "$ref": "#/definitions/address" },

"billTo": { "$ref": "#/definitions/address" },

"comment": { "type": "string" },

"items": {

"type": "array",

"items": { "$ref": "#/definitions/item" },

"minItems": 1,

"additionalItems": false

}

},

"definitions": {

"address": {

"type": "object",

"properties": {

"name": { "type": "string" },

"street": { "type": "string" },

"city": { "type": "string" },

"zip": { "type": "number" }

},

"required": ["name", "street", "city", "zip"]

},

"item": {

"type": "object",

"properties": {

"partNum": { "type": "string" },

"productName": { "type": "string" },

"quantity": { "type": "number", "minimum": 0 },

"priceEUR": { "type": "number", "minimum": 0 },

"comment": { "type": "string" }

},

"required": ["partNum", "productName", "quantity", "priceEUR"]

}

}

}

Based on this, a sample purchase order would be as follows:

{

"shipTo": {

"name": "John Doe",

"street": "Europa Avenue 123",

"city": "Brussels",

"zip": 1000

},

"billTo": {

"name": "Jane Doe",

"street": "Europa Avenue 210",

"city": "Brussels",

"zip": 1000

},

"orderDate": "2020-01-22",

"comment": "Send in one package please",

"items": [

{

"partNum": "XYZ-123876",

"productName": "Mouse",

"quantity": 20,

"priceEUR": 15.99,

"comment": "Confirm this is wireless"

},

{

"partNum": "ABC-32478",

"productName": "Keyboard",

"quantity": 15,

"priceEUR": 25.50

}

]

}

A first obvious validation for purchase orders would be against the defined JSON schema. However, your business requirements also define the concept of a large purchase order which is one that includes more than 10 of each ordered item. This restriction is not reflected in the JSON schema which is considered as a base for all purchase orders but rather in a separate JSON schema file that checks this only for orders that are supposed to be “large”. Such a rule file would be as follows:

{

"$id": "http://itb.ec.europa.eu/sample/PurchaseOrder-large.schema.json",

"$schema": "http://json-schema.org/draft-07/schema#",

"description": "Business rules for large EU Purchase Orders expressed in JSON",

"type": "object",

"required": [ "items" ],

"properties": {

"items": {

"type": "array",

"items": {

"type": "object"

},

"minItems": 10

}

}

}

As you see in the content of the two schemas, the first one defines the structure of the expected JSON objects and their properties, whereas the second one does not replicate structural checks, focusing only on the number of items. In this case a valid large purchase order would be expected to validate against both schemas.
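To make the role of the second schema concrete, the following is a minimal Python sketch that evaluates only the handful of keywords used by PurchaseOrder-large.schema.json (type, required, properties, items and minItems). It is a hand-written illustration and not the validator's actual engine; the check function and the shortened test documents are assumptions made for this example, and $ref is deliberately not supported. Note that minItems constrains the number of entries in the items array.

```python
import json

# Type predicates for the few JSON types used in this guide's schemas.
TYPE_CHECKS = {
    "object": lambda v: isinstance(v, dict),
    "array": lambda v: isinstance(v, list),
    "string": lambda v: isinstance(v, str),
    # bool is a subclass of int in Python, so it is excluded explicitly.
    "number": lambda v: isinstance(v, (int, float)) and not isinstance(v, bool),
}


def check(instance, schema, path="$"):
    """Return a list of error strings for a small subset of JSON Schema keywords."""
    expected = schema.get("type")
    if expected in TYPE_CHECKS and not TYPE_CHECKS[expected](instance):
        return [f"{path}: expected {expected}"]
    errors = []
    if isinstance(instance, dict):
        for name in schema.get("required", []):
            if name not in instance:
                errors.append(f"{path}: missing required property '{name}'")
        for name, sub_schema in schema.get("properties", {}).items():
            if name in instance:
                errors += check(instance[name], sub_schema, f"{path}.{name}")
    if isinstance(instance, list):
        if len(instance) < schema.get("minItems", 0):
            errors.append(f"{path}: fewer than {schema['minItems']} items")
        if isinstance(schema.get("items"), dict):
            for position, element in enumerate(instance):
                errors += check(element, schema["items"], f"{path}[{position}]")
    return errors


# The relevant keywords of PurchaseOrder-large.schema.json.
LARGE_SCHEMA = json.loads("""
{
  "type": "object",
  "required": [ "items" ],
  "properties": {
    "items": { "type": "array", "items": { "type": "object" }, "minItems": 10 }
  }
}
""")

# Shortened, hypothetical documents: only the "items" array matters here.
two_items = {"items": [{"partNum": "XYZ-123876"}, {"partNum": "ABC-32478"}]}
ten_items = {"items": [{"partNum": f"P-{n}"} for n in range(10)]}

print(check(two_items, LARGE_SCHEMA))  # ['$.items: fewer than 10 items']
print(check(ten_items, LARGE_SCHEMA))  # []
```

A document with two entries in its items array is reported against this schema, whereas one with ten entries passes. Structural checks on each entry remain the job of the base schema.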

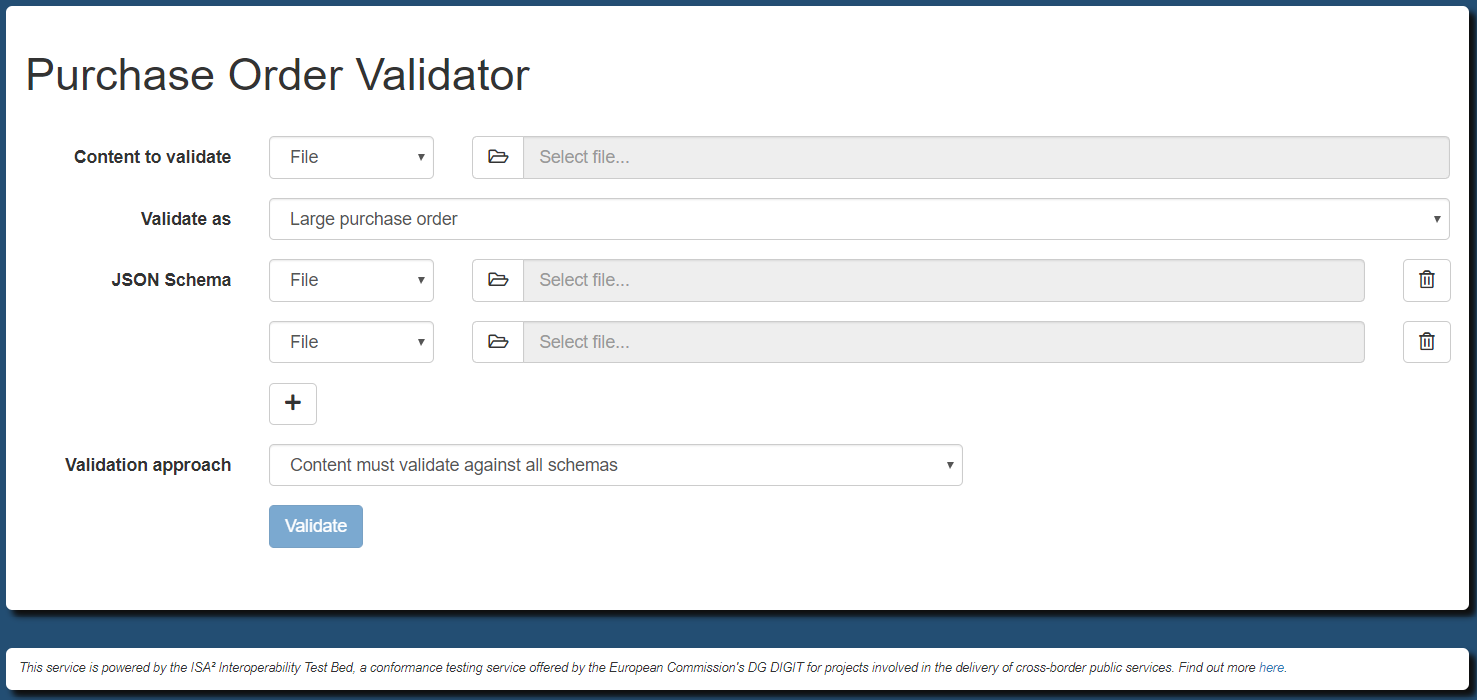

Given these requirements and validation artefacts we want to support two types of validation (or profiles):

basic: For all purchase orders acting as a common base. This is realised by validating against PurchaseOrder.schema.json.

large: For large purchase orders. This is realised by validating against PurchaseOrder.schema.json and PurchaseOrder-large.schema.json.

As the first configuration step for the validator we will prepare a folder with the required resources. For this purpose create a root folder named validator with the following subfolders and files:

validator
└── resources
    └── order
        └── schemas
            ├── PurchaseOrder.schema.json
            └── PurchaseOrder-large.schema.json

Regarding the PurchaseOrder.schema.json and PurchaseOrder-large.schema.json files you can create them from the above content or download them (here: PurchaseOrder.schema.json and PurchaseOrder-large.schema.json). Finally, note that you are free to use any names for the files and folders; the ones used here will however be the ones considered in this guide’s subsequent steps.
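If you prefer to script this step, the folder structure can be created with a few lines of Python. This is only a convenience sketch: the create_layout function is a name chosen for this example, and the files it writes are empty placeholders ({}) that you would replace with the schema content shown earlier. The demonstration runs in a temporary folder so that nothing is left behind.

```python
import tempfile
from pathlib import Path

SCHEMA_FILES = ("PurchaseOrder.schema.json", "PurchaseOrder-large.schema.json")


def create_layout(root):
    """Create validator/resources/order/schemas under root and return the schemas folder."""
    schemas = Path(root) / "validator" / "resources" / "order" / "schemas"
    schemas.mkdir(parents=True, exist_ok=True)
    for name in SCHEMA_FILES:
        target = schemas / name
        if not target.exists():
            # Placeholder only: replace with the actual schema content.
            target.write_text("{}\n", encoding="utf-8")
    return schemas


# Demonstration in a temporary folder; pass your own root folder for real use.
with tempfile.TemporaryDirectory() as tmp:
    created = create_layout(tmp)
    print(sorted(path.name for path in created.iterdir()))
```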

Step 3: Prepare validator configuration

After having defined your testing needs and the validation artefacts for your specific case, the next step will be to configure the validator. The validator is defined by a core engine maintained by the Test Bed team and a layer of configuration, provided by you, that defines its use for a specific scenario. In terms of features the validator supports the following:

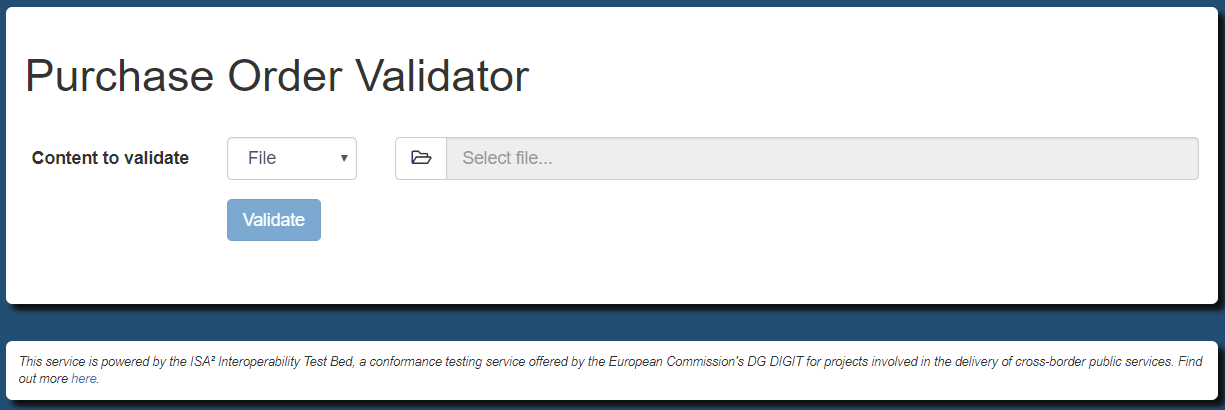

Validation channels including a REST web service API, a SOAP web service API, a web user interface and a command-line tool.

Configuration of JSON schemas to drive the validation that can be local or remote.

Definition of different validation types as logically-related sets of validation artefacts.

Support, per validation type, for user-provided schemas.

Definition of separate validator configurations that are logically split but run as part of a single validator instance. Such configurations are termed “validation domains”.

Customisation of all texts presented to users.

Configuration is provided by means of key-value pairs in a property file. This file can be named as you want but needs to end with the .properties extension. In our case we will name this config.properties and place it within the order folder. Recall that the purpose of this folder is to store all resources relevant to purchase order validation. These are the validation artefacts themselves (PurchaseOrder.schema.json and PurchaseOrder-large.schema.json) and the configuration file (config.properties).

Define the content of the config.properties file as follows:

# The different types of validation to support. These values are reflected in other properties.

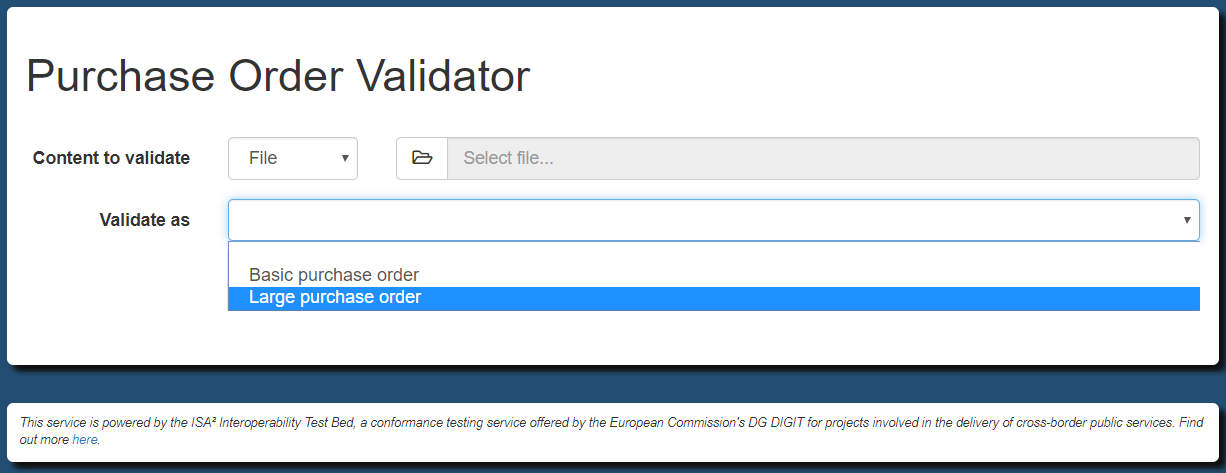

validator.type = basic, large

# Labels to describe the defined types.

validator.typeLabel.basic = Basic purchase order

validator.typeLabel.large = Large purchase order

# Validation artefacts (JSON schema) to consider for the "basic" type.

validator.schemaFile.basic = schemas/PurchaseOrder.schema.json

# Validation artefacts (JSON schema) to consider for the "large" type.

validator.schemaFile.large = schemas/PurchaseOrder.schema.json, schemas/PurchaseOrder-large.schema.json

# The title to display for the validator's user interface.

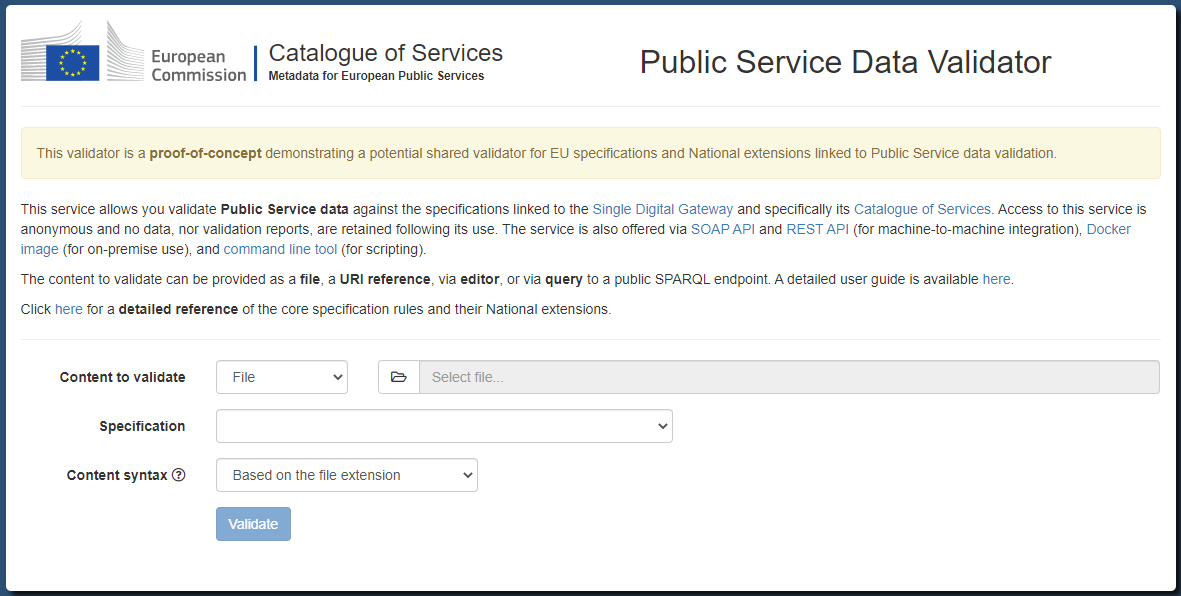

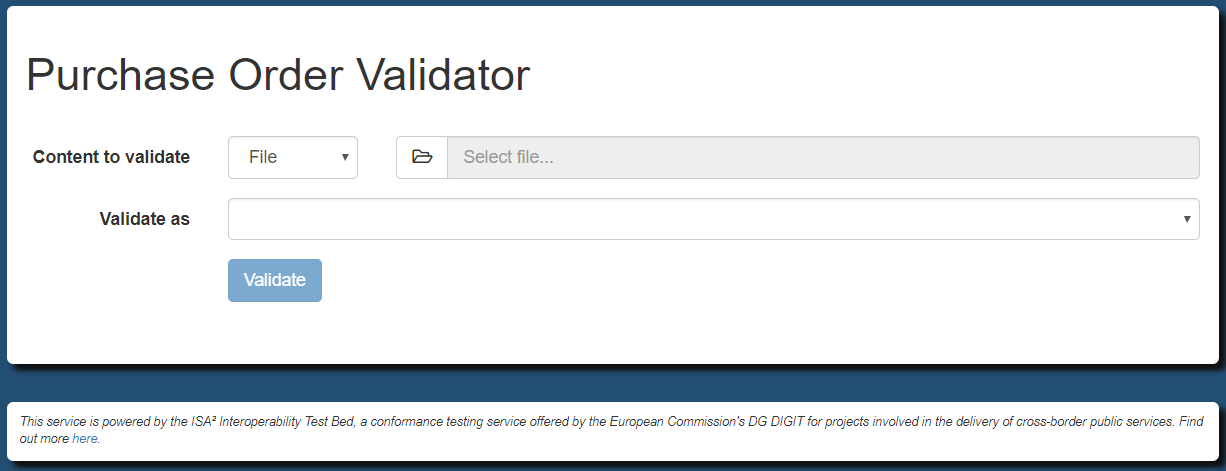

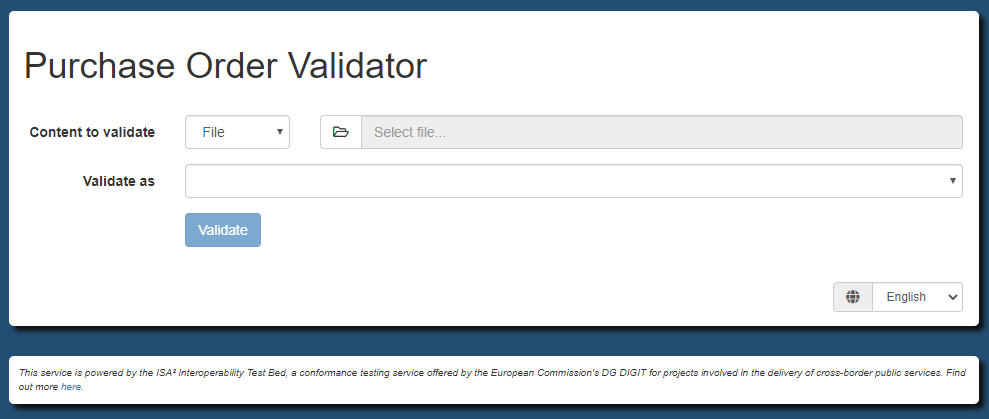

validator.uploadTitle = Purchase Order Validator

All validator properties share a validator. prefix. The validator.type property is key as it defines one or more types of validation that will be supported (multiple are provided as a comma-separated list of values). The values provided here are important not only because they define the available validation types but also because they drive most other configuration properties. Regarding the validation artefacts themselves, these are provided by means of the validator.schemaFile properties per validation type:

validator.schemaFile.TYPE defines one or more (comma-separated) file paths (relative to the configuration file) to lookup schema files.

Using these properties you define the validator’s validation artefacts as local files, where provided paths can be for a file or a folder. If a folder is referenced it will load all contained top-level files (i.e. ignoring subfolders).
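The path handling described above can be sketched in Python as follows. This is an illustration under stated assumptions rather than the validator's implementation: resolve_schema_files is a hypothetical helper, and returning a folder's files in sorted order is a choice made for this example only.

```python
import tempfile
from pathlib import Path


def resolve_schema_files(value, config_file):
    """Expand a comma-separated validator.schemaFile.TYPE value into concrete files."""
    base = Path(config_file).parent  # paths are relative to the configuration file
    files = []
    for entry in (part.strip() for part in value.split(",")):
        if not entry:
            continue
        target = base / entry
        if target.is_dir():
            # A folder contributes its top-level files only; subfolders are ignored.
            files += sorted(path for path in target.iterdir() if path.is_file())
        else:
            files.append(target)
    return files


# Demonstration with a temporary domain folder.
with tempfile.TemporaryDirectory() as tmp:
    order = Path(tmp) / "order"
    (order / "schemas" / "common").mkdir(parents=True)
    (order / "schemas" / "PurchaseOrder.schema.json").write_text("{}", encoding="utf-8")
    (order / "schemas" / "common" / "Address.schema.json").write_text("{}", encoding="utf-8")
    resolved = resolve_schema_files("schemas", order / "config.properties")
    print([path.name for path in resolved])  # ['PurchaseOrder.schema.json']
```

Referencing the schemas folder picks up PurchaseOrder.schema.json but not the file within the common subfolder.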

Note

Further validation artefact configuration: You may also define validation artefacts as remote resource references and/or as being user-provided.

The purpose of the remaining properties is to customise the text descriptions presented to users:

validator.typeLabel defines a label to present to users on the validator’s user interface for the type in question.

validator.uploadTitle defines the title label to present to users on the validator’s user interface.
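To see how these properties relate to each other, the following Python sketch reads a simplified version of the configuration and lists each validation type with its label. The parse_properties and split_list helpers are assumptions made for this example; they handle only plain "key = value" lines and # comments, not the full .properties syntax. Falling back to the type name when no label is defined is likewise an illustrative choice, not a statement about the validator's behaviour.

```python
def parse_properties(text):
    """Parse simple 'key = value' lines, skipping blank lines and '#' comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def split_list(value):
    """Split a comma-separated property value into trimmed, non-empty items."""
    return [item.strip() for item in value.split(",") if item.strip()]


CONFIG = """\
# The different types of validation to support.
validator.type = basic, large
validator.typeLabel.basic = Basic purchase order
validator.typeLabel.large = Large purchase order
validator.uploadTitle = Purchase Order Validator
"""

props = parse_properties(CONFIG)
labels = {
    vtype: props.get(f"validator.typeLabel.{vtype}", vtype)
    for vtype in split_list(props["validator.type"])
}
print(labels)  # {'basic': 'Basic purchase order', 'large': 'Large purchase order'}
```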

Once you have created the config.properties file, the validator folder should be as follows:

validator
└── resources
    └── order
        ├── schemas
        │   ├── PurchaseOrder.schema.json
        │   └── PurchaseOrder-large.schema.json
        └── config.properties

When you are defining multiple schema files for a given validation type you may also want to specify how these are combined. This is done by means of the validator.schemaFile.TYPE.combinationApproach property (for a given TYPE) that accepts one of three values:

allOf: Content must validate against all defined schemas. This is the default if not specified.

anyOf: Content must validate against any of the defined schemas.

oneOf: Content must validate against one, and only one, of the defined schemas.

In our configuration for large purchase orders we define two schemas which by default are applied with allOf semantics. If the schemas were rather two possible alternatives an anyOf value would be more appropriate. This would be configured as follows:

validator.type = basic, large

...

validator.schemaFile.large = schemas/PurchaseOrder.schema.json, schemas/PurchaseOrder-large.schema.json

validator.schemaFile.large.combinationApproach = anyOf
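The difference between the three approaches can be illustrated with a short Python sketch that combines per-schema outcomes. The combine function is a hypothetical helper written for this example; it only mirrors the semantics described above and says nothing about how the validator implements them internally.

```python
def combine(results, approach="allOf"):
    """Combine per-schema outcomes (True means valid) using the given approach."""
    passed = sum(1 for ok in results if ok)
    if approach == "allOf":
        return passed == len(results)
    if approach == "anyOf":
        return passed >= 1
    if approach == "oneOf":
        return passed == 1
    raise ValueError(f"Unknown combination approach: {approach}")


# Content that is valid against the first schema but not the second.
outcomes = [True, False]
for approach in ("allOf", "anyOf", "oneOf"):
    print(approach, combine(outcomes, approach))
# allOf False
# anyOf True
# oneOf True
```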

The limited configuration file we have prepared assumes numerous default configuration properties. An important example is that by default, the validator will expose a web user interface, SOAP web service API and REST web service API. This configuration is driven through the validator.channels property that by default is set to form, soap_api, rest_api (for a user form, SOAP web service and REST web service respectively). All configuration properties provided in config.properties relate to the specific domain in question, notably purchase orders, reflected in the validator’s resources as the order folder. Although rarely needed, you may define additional validation domains each with its own set of validation artefacts and configuration file (see Configuring additional validation domains for details on this). Finally, if you are planning to host your own validator instance you can also define configuration at the level of the complete validator (see Additional configuration options regarding application-level configuration options).

For the complete reference of all available configuration properties and their default values refer to section Validator configuration properties.

Remote validation artefacts

Defining the validator’s artefacts as local files is not the only option. If these are available online you can also reference them remotely by means of property validator.schemaFile.TYPE.remote.N.url. The N element in the properties’ names is a zero-based integer index allowing you to define more than one entry to match the number of remote files.

The example that follows illustrates the loading of two remote schemas for a validation type named v2.2.1 from a remote location:

validator.type = v2.2.1

...

validator.schemaFile.v2.2.1.remote.0.url = https://my.server.com/my_schema_1.json

validator.schemaFile.v2.2.1.remote.1.url = https://my.server.com/my_schema_2.json
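As a rough illustration of how the indexed entries fit together, the following Python sketch collects the remote URLs for a validation type from already-parsed properties, ordered by their N index. The remote_schema_urls helper is an assumption made for this example. Note that it escapes the type name, since types such as v2.2.1 themselves contain dots.

```python
import re


def remote_schema_urls(props, validation_type):
    """Return the remote schema URLs configured for a type, ordered by index N."""
    prefix = f"validator.schemaFile.{validation_type}.remote."
    pattern = re.compile(re.escape(prefix) + r"(\d+)\.url$")
    found = []
    for key, value in props.items():
        match = pattern.match(key)
        if match:
            found.append((int(match.group(1)), value))
    return [url for _, url in sorted(found)]


PROPS = {
    "validator.type": "v2.2.1",
    "validator.schemaFile.v2.2.1.remote.1.url": "https://my.server.com/my_schema_2.json",
    "validator.schemaFile.v2.2.1.remote.0.url": "https://my.server.com/my_schema_1.json",
}
print(remote_schema_urls(PROPS, "v2.2.1"))
```

The result lists my_schema_1.json before my_schema_2.json regardless of the order in which the properties were declared.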

In case remote schemas fail to be retrieved, you may choose to report this to your users. This is achieved by using property validator.remoteArtefactLoadErrors.TYPE to adapt this for a given validation type, or validator.remoteArtefactLoadErrors to set your default approach (see Domain-level configuration). The values you may set are:

fail, to log the error, immediately stop validation and report this as an error to the user.

warn, to log the error, continue validation, but display a warning to the user that the results may be incomplete.

log, considered by default, to log the error but continue validation normally without notifying the user.
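The practical effect of the three values can be summarised in a small Python sketch. The on_remote_load_error function, the logger name and the shape of the report entries are all hypothetical and exist only to illustrate the described behaviour.

```python
import logging

LOG = logging.getLogger("validator.sketch")  # hypothetical logger name


def on_remote_load_error(policy, error, report):
    """Apply the fail / warn / log policy; return True if validation may continue."""
    # The error is logged in all three cases.
    LOG.error("Remote schema could not be loaded: %s", error)
    if policy == "fail":
        report.append(("error", f"Remote schema could not be loaded: {error}"))
        return False
    if policy == "warn":
        report.append(("warning", f"Results may be incomplete: {error}"))
        return True
    if policy == "log":
        return True  # the user is not notified
    raise ValueError(f"Unknown policy: {policy}")


for policy in ("fail", "warn", "log"):
    report = []
    proceed = on_remote_load_error(policy, "HTTP 404", report)
    print(policy, proceed, report)
```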

You may also combine local and remote schema files by defining a validator.schemaFile.TYPE property and one or more validator.schemaFile.TYPE.remote.N.url properties. In all cases, the schemas from all sources will be applied for the validation.

Note

Remote schema caching: Caching is used to avoid constant lookups of remote schema files. Once loaded, remote schemas will be automatically refreshed every hour.

Reuse schema definitions in other schemas

When a JSON specification defines multiple types of content, validation would typically be driven through multiple schemas. As the number of schemas grows, you will likely encounter common definitions that you would like to define once and reuse when needed.

The JSON Schema specification foresees the $ref keyword for this purpose that allows you to define a given property based on a sub-schema. Such sub-schemas are typically included within the schema itself resulting in an internal reference. We already saw this in our Purchase Order example by defining sub-schemas for addresses (#/definitions/address) and order items (#/definitions/item):

{

"$id": "http://itb.ec.europa.eu/sample/PurchaseOrder.schema.json",

"$schema": "http://json-schema.org/draft-07/schema#",

"description": "A JSON representation of EU Purchase Orders",

"type": "object",

"required": [ "shipTo", "billTo", "orderDate", "items" ],

"properties": {

"orderDate": { "type": "string" },

"shipTo": { "$ref": "#/definitions/address" },

"billTo": { "$ref": "#/definitions/address" },

"comment": { "type": "string" },

"items": {

"type": "array",

"items": { "$ref": "#/definitions/item" },

"minItems": 1,

"additionalItems": false

}

},

"definitions": {

"address": {

"type": "object",

"properties": {

"name": { "type": "string" },

"street": { "type": "string" },

"city": { "type": "string" },

"zip": { "type": "number" }

},

"required": ["name", "street", "city", "zip"]

},

"item": {

"type": "object",

"properties": {

"partNum": { "type": "string" },

"productName": { "type": "string" },

"quantity": { "type": "number", "minimum": 0 },

"priceEUR": { "type": "number", "minimum": 0 },

"comment": { "type": "string" }

},

"required": ["partNum", "productName", "quantity", "priceEUR"]

}

}

}

When defining multiple schemas the above approach does not suffice as you would want to share definitions across schemas. To illustrate this we will consider the address definition, which we will extract into its own schema to be referenced from other schemas. We will place this in a common folder which will be considered as the place for all such shared schemas:

validator
└── resources
    └── order
        └── schemas
            ├── common
            │   └── Address.schema.json
            └── PurchaseOrder.schema.json

The content of the Address.schema.json is defined as follows:

{

"$id": "http://itb.ec.europa.eu/sample/Address.schema.json",

"$schema": "http://json-schema.org/draft-07/schema#",

"description": "A JSON representation of addresses",

"type": "object",

"properties": {

"name": { "type": "string" },

"street": { "type": "string" },

"city": { "type": "string" },

"zip": { "type": "number" }

},

"required": ["name", "street", "city", "zip"]

}

Note the $id of the schema with value http://itb.ec.europa.eu/sample/Address.schema.json. To reference this schema you refer to this identifier in the relevant $ref properties as illustrated below:

{

"$id": "http://itb.ec.europa.eu/sample/PurchaseOrder.schema.json",

"$schema": "http://json-schema.org/draft-07/schema#",

"description": "A JSON representation of EU Purchase Orders",

"type": "object",

"required": [ "shipTo", "billTo", "orderDate", "items" ],

"properties": {

"orderDate": { "type": "string" },

"shipTo": { "$ref": "http://itb.ec.europa.eu/sample/Address.schema.json" },

"billTo": { "$ref": "http://itb.ec.europa.eu/sample/Address.schema.json" },

"comment": { "type": "string" },

"items": {

"type": "array",

"items": { "$ref": "#/definitions/item" },

"minItems": 1,

"additionalItems": false

}

},

"definitions": {

"item": {

"type": "object",

"properties": {

"partNum": { "type": "string" },

"productName": { "type": "string" },

"quantity": { "type": "number", "minimum": 0 },

"priceEUR": { "type": "number", "minimum": 0 },

"comment": { "type": "string" }

},

"required": ["partNum", "productName", "quantity", "priceEUR"]

}

}

}

Instead of http://itb.ec.europa.eu/sample/Address.schema.json you may use Address.schema.json, thus omitting the base path of http://itb.ec.europa.eu/sample/. If no base path is provided, the one from the parent schema is considered by default.
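This resolution of a relative reference against the parent schema's identifier follows standard URI resolution, which you can reproduce with Python's standard library. The resolve_ref function name is chosen for this example only.

```python
from urllib.parse import urljoin


def resolve_ref(parent_id, ref):
    """Resolve a $ref value against the $id of the schema that contains it."""
    return urljoin(parent_id, ref)


PARENT_ID = "http://itb.ec.europa.eu/sample/PurchaseOrder.schema.json"

# A relative reference takes its base path from the parent schema.
print(resolve_ref(PARENT_ID, "Address.schema.json"))
# http://itb.ec.europa.eu/sample/Address.schema.json

# An absolute reference is used as-is.
print(resolve_ref(PARENT_ID, "http://itb.ec.europa.eu/sample/Address.schema.json"))
# http://itb.ec.europa.eu/sample/Address.schema.json
```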

The last step to make this reference work is to tell the validator which schemas should be considered as reusable. The JSON Schema specification leaves the support for such schema reuse open to validator implementations, which, in the case of the Test Bed’s JSON validator, is achieved through property validator.referencedSchemas.

You use this property to define a comma-separated set of paths (files or directories) relative to your domain’s root folder. Each of these paths points to a given JSON schema that you want to flag as being eligible for reuse. If a directory is provided, all JSON schemas contained (recursively) within it will be considered. For the resulting set of JSON schemas, the validator will determine their identifiers (property $id) which is how they will be referenced by other schemas.

In our case recall that we defined a common folder to contain all reusable schemas. We thus need to update our domain configuration file to define it as such:

...

# Set the common folder as the root of all shared schemas.

# We could also have pointed to the individual file(s) (e.g. schemas/common/Address.schema.json).

validator.referencedSchemas = schemas/common
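Conceptually, the referenced schemas form a lookup table keyed by each schema's $id. The following Python sketch builds such a table from a validator.referencedSchemas value. It is an approximation under assumptions: index_referenced_schemas is a hypothetical helper, only files with a .json extension are considered within directories, and files without an $id are skipped.

```python
import json
import tempfile
from pathlib import Path


def index_referenced_schemas(value, domain_root):
    """Map $id values to schemas for the comma-separated paths in value."""
    index = {}
    for entry in (part.strip() for part in value.split(",")):
        if not entry:
            continue
        target = Path(domain_root) / entry
        # Directories are scanned recursively; a file path is taken as-is.
        candidates = sorted(target.rglob("*.json")) if target.is_dir() else [target]
        for path in candidates:
            schema = json.loads(path.read_text(encoding="utf-8"))
            if isinstance(schema, dict) and "$id" in schema:
                index[schema["$id"]] = schema
    return index


# Demonstration with a temporary domain root folder.
with tempfile.TemporaryDirectory() as tmp:
    common = Path(tmp) / "schemas" / "common"
    common.mkdir(parents=True)
    address = {"$id": "http://itb.ec.europa.eu/sample/Address.schema.json", "type": "object"}
    (common / "Address.schema.json").write_text(json.dumps(address), encoding="utf-8")
    index = index_referenced_schemas("schemas/common", tmp)
    print(sorted(index))  # ['http://itb.ec.europa.eu/sample/Address.schema.json']
```

A $ref whose resolved value matches one of the keys in this table can then be satisfied from the local copy.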

Finally, it may be interesting to note that when referencing another schema you may also refer to its own sub-schemas. If, for example, we wanted to reuse the definition of items from the PurchaseOrder.schema.json schema we would need to first add it as a reusable schema …

...

validator.referencedSchemas = schemas/common, schemas/PurchaseOrder.schema.json

… and then reference its internal item definition as follows:

"item": { "$ref": "PurchaseOrder.schema.json#/definitions/item" }

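A reference of this form combines a schema identifier with a JSON Pointer fragment. The following Python sketch shows how such a reference could be resolved against a table of schemas keyed by $id. The resolve function, the index table and the Invoice.schema.json parent identifier are assumptions made for this illustration.

```python
from urllib.parse import unquote, urldefrag, urljoin


def resolve(ref, parent_id, index):
    """Resolve a $ref (optionally with a JSON Pointer fragment) using an $id index."""
    absolute, fragment = urldefrag(urljoin(parent_id, ref))
    node = index[absolute]
    tokens = fragment.split("/")[1:] if fragment else []
    for token in tokens:
        # Undo URI percent-encoding and JSON Pointer escaping (~1 is '/', ~0 is '~').
        token = unquote(token).replace("~1", "/").replace("~0", "~")
        node = node[int(token)] if isinstance(node, list) else node[token]
    return node


PURCHASE_ORDER_ID = "http://itb.ec.europa.eu/sample/PurchaseOrder.schema.json"
index = {
    PURCHASE_ORDER_ID: {
        "$id": PURCHASE_ORDER_ID,
        "definitions": {"item": {"type": "object", "required": ["partNum"]}},
    }
}

# Hypothetical schema that reuses the item definition.
parent_id = "http://itb.ec.europa.eu/sample/Invoice.schema.json"
print(resolve("PurchaseOrder.schema.json#/definitions/item", parent_id, index))
# {'type': 'object', 'required': ['partNum']}
```
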
Mapping remote schemas to local copies

In the previous section you saw that you can reuse your schema definitions by designating a set of referenced schemas. Besides serving to reuse schema definitions across your own schemas, you can use the same approach to provide local copies for remote schemas, thus avoiding remote lookups. This could be interesting in case your validator cannot access online resources, or to make schema lookup as efficient as possible.

To provide a local copy for a remote schema, simply place a copy of the schema in a folder and configure that folder in property validator.referencedSchemas, providing its relative path with respect to the domain root. In this property you may also refer to individual schema files, and also include multiple paths by comma-separating them. To have a schema loaded locally, the $ref property used to refer to it (when in absolute form), must match the schema’s $id property.

In the following example, consider a shipTo property that is defined by a remote address schema:

...

"properties": {

"shipTo": { "$ref": "https://myproject.org/schemas/Address.schema.json" },

...

}

To avoid the referenced schema being looked up online, we can place a copy in a local folder under a domain root. The schema should have a matching $id property:

{

"$id": "https://myproject.org/schemas/Address.schema.json",

...

}

In addition, we need to tell the validator to load local schemas from the local folder:

...

# Set the local folder as the root of all locally mapped schemas.

validator.referencedSchemas = local

The validator.referencedSchemas property provides a flexible way of defining a cache of schemas, serving to either reuse schemas in others, or to avoid online lookups.

Supporting options per validation type

The different types of validation supported by the validator (enumerated using property validator.type) determine the different kinds of validation that your users may select. Available types are listed in the validator’s web user interface in a dropdown list, and need to be provided as input when executing a validation.

It could be the case that your validator needs to support an extra level of granularity over the validation types. This would apply if each validation type has itself a set of additional options that actually define the specific validation to take place. For example, a validator for a specification defining rules for different types of data structures, may need to also allow users to select the desired version number. In this case we would define:

As validation types, the specification’s foreseen data structures.

As validation type options, the version numbers for each data structure.

Configuring such options can greatly simplify a validator’s configuration given that certain common data needs to be defined only once. In addition, the validator’s user interface becomes much more intuitive by listing two dropdowns in place of one: the first one to select the validation type, and the second one to select its specific option. The alternative, simply configuring all combinations as separate validation types, would render the validator less intuitive and more difficult to maintain.

Options are defined per validation type using validator.typeOptions.TYPE properties, for which the applicable options are defined as a string with comma-separated values. Once options are defined, most configuration properties that are specific to validation types now consider the full type as TYPE.OPTION (type followed by option and separated by .).

In terms of defining labels for options we can use:

validator.typeOptionLabel.TYPE.OPTION, for the label of an option specific to a given validation type.

validator.optionLabel.OPTION, for the label of an option that is the same across types.

validator.completeTypeOptionLabel.TYPE.OPTION, for a label to better express the combination of type plus option.

Revisiting our EU Purchase Order example we could add support for specification versions by configuring properties as follows (we skip defining labels as the option value suffices):

# Validation types

validator.type = basic, large

validator.typeLabel.basic = Basic purchase order

validator.typeLabel.large = Large purchase order

# Options

validator.typeOptions.basic = v1.2.0, v1.1.0, v1.0.0

validator.typeOptions.large = v1.1.0, v1.0.0

# Validation artefacts

validator.schemaFile.basic.v1.2.0 = schemas/v1.2.0/PurchaseOrder.schema.json

validator.schemaFile.basic.v1.1.0 = schemas/v1.1.0/PurchaseOrder.schema.json

validator.schemaFile.basic.v1.0.0 = schemas/v1.0.0/PurchaseOrder.schema.json

validator.schemaFile.large.v1.1.0 = schemas/v1.1.0/PurchaseOrder.schema.json, schemas/v1.1.0/PurchaseOrder-large.schema.json

validator.schemaFile.large.v1.0.0 = schemas/v1.0.0/PurchaseOrder.schema.json, schemas/v1.0.0/PurchaseOrder-large.schema.json
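To check that every combination of type and option has its validation artefacts configured, you can enumerate the full types as in the following Python sketch. The full_types and split_list helpers are assumptions made for this example, working on properties that have already been parsed into a dictionary.

```python
def split_list(value):
    """Split a comma-separated property value into trimmed, non-empty items."""
    return [item.strip() for item in value.split(",") if item.strip()]


def full_types(props):
    """List full types as TYPE.OPTION, or just TYPE when a type has no options."""
    result = []
    for vtype in split_list(props.get("validator.type", "")):
        options = split_list(props.get(f"validator.typeOptions.{vtype}", ""))
        result += [f"{vtype}.{option}" for option in options] or [vtype]
    return result


PROPS = {
    "validator.type": "basic, large",
    "validator.typeOptions.basic": "v1.2.0, v1.1.0, v1.0.0",
    "validator.typeOptions.large": "v1.1.0, v1.0.0",
}

# Each full type corresponds to one expected validator.schemaFile property.
for full_type in full_types(PROPS):
    print(f"validator.schemaFile.{full_type}")
```

The printed names match the five validator.schemaFile properties defined in the configuration above.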

Note

The configuration property reference specifies per property whether it expects the validation type, option or full type (validation type plus option) as part of its definition.

Presenting validation types in groups

Similar to supporting validation type options you can also add further organisation to your proposed validation types by means of validation type groups. These groups apply to the validator’s web user interface by presenting your validation types in separate sets. Such sets could refer to different families of specifications, different solutions, or anything else that forms a meaningful grouping in the context of your validator. Configuring groups has no effect on how validation artefacts are set up, nor on other properties that apply to specific validation types.

To define groups you include in your configuration one or more validator.typeGroup.GROUP entries, set to the list of validation types the group includes. You may also provide a user-friendly name for each group through validator.typeGroupLabel.GROUP properties.

Given that the groups’ purpose is specific to your validator, you also have several options on how these are presented. Groups can be displayed as:

Inline elements included as option groups in the validation types’ dropdown list.

A separate dropdown list presented as a selection step before selecting a validation type.

To specify the groups’ presentation approach you define property validator.typeGroupPresentation, set as inline (the default, presenting groups within the validation type dropdown list), or split (presenting groups in a separate dropdown). In the latter case you would typically also want to override the label of the groups’ dropdown list through property validator.label.typeGroupLabel (the default label being “Group”).

Revisiting our EU Purchase Order example we could include groups to split the available types in “production” and “development” modes, the latter including an “experimental” configuration. The following properties illustrate how this could be achieved:

...

validator.type = basic, large, experimental

validator.typeOptions.basic = v2.1.0, v2.0.0, v1.2.0, v1.1.0

validator.typeOptions.large = v2.1.0, v2.0.0

# Define 'prod' and 'dev' groups.

validator.typeGroup.prod = basic, large

validator.typeGroup.dev = experimental

# Label the groups accordingly.

validator.typeGroupLabel.prod = Production

validator.typeGroupLabel.dev = Development

# Present a separate dropdown with the groups (as opposed to inline).

validator.typeGroupPresentation = split

# Override the groups' dropdown label.

validator.label.typeGroupLabel = Validation mode

Note

When using groups, all validation types must be mapped to groups; otherwise the domain’s configuration is considered invalid.
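The rule in the note above can be checked before deploying a configuration. The following is a minimal sketch in Python, not part of the validator itself: the helper names (parse_properties, ungrouped_types) are hypothetical, and the parser only handles simple "key = value" lines as used in this guide.

```python
def parse_properties(text):
    """Parse simple 'key = value' lines, skipping blank lines and '#' comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def split_list(value):
    """Split a comma-separated property value into trimmed entries."""
    return [item.strip() for item in value.split(",") if item.strip()]


def ungrouped_types(props):
    """Return the validation types not mapped to any group.

    Returns an empty list when no groups are defined at all,
    as the rule only applies when groups are used.
    """
    group_keys = [k for k in props if k.startswith("validator.typeGroup.")]
    if not group_keys:
        return []
    grouped = set()
    for key in group_keys:
        grouped.update(split_list(props[key]))
    types = split_list(props.get("validator.type", ""))
    return [t for t in types if t not in grouped]


config = """
validator.type = basic, large, experimental
validator.typeGroup.prod = basic, large
validator.typeGroup.dev = experimental
validator.typeGroupLabel.prod = Production
"""

missing = ungrouped_types(parse_properties(config))
print("Types without a group:", missing)
```

Note that the prefix check uses the trailing dot, so properties such as validator.typeGroupLabel.prod and validator.typeGroupPresentation are not mistaken for group definitions.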

Validation type aliases

Validation type aliases are alternative ways of referring to the configured validation types. They become meaningful when users refer directly to specific types, such as when using the validator’s REST API or SOAP API. Typical use cases for aliases would be:

To define an additional “latest” alias that always points to the latest version of your specifications.

To enable backwards compatibility when validation types are reorganised in a configuration update.

To define a validator alias add one or more validator.typeAlias.ALIAS properties where ALIAS is the alias you want to define. As the value of the property you set the target validation type.

Note

Validator aliases refer to full validation types, meaning the combination of validation type and option (TYPE.OPTION).

As an example consider the following configuration:

validator.type = basic, large, preview

validator.typeOptions.basic = v2.1.0, v2.0.0

validator.typeOptions.large = v2.1.0, v2.0.0

The available full validation types based on these properties are basic.v2.1.0, basic.v2.0.0, large.v2.1.0, large.v2.0.0 and preview.

Based on this example we can consider that you may want to add aliases named basic_latest and large_latest for the latest versions of each supported profile. To do so extend your configuration with the following properties:

validator.typeAlias.basic_latest = basic.v2.1.0

validator.typeAlias.large_latest = large.v2.1.0

Doing so allows clients of your APIs that are interested in always validating against the latest specifications to do so by referring to these aliases. Otherwise, if new versions were introduced, they would need to adapt their implementation.
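To make the relationship between types, options and aliases concrete, here is a minimal Python sketch of how a requested type could be resolved. It illustrates the configuration semantics described above and is not the validator's actual implementation; the function names are hypothetical and the configuration is represented as a plain dictionary.

```python
def split_list(value):
    """Split a comma-separated property value into trimmed entries."""
    return [item.strip() for item in value.split(",") if item.strip()]


def full_types(props):
    """List the full validation types (TYPE.OPTION, or TYPE if it has no options)."""
    result = []
    for vtype in split_list(props["validator.type"]):
        options = props.get("validator.typeOptions." + vtype)
        if options:
            result.extend(vtype + "." + option for option in split_list(options))
        else:
            result.append(vtype)
    return result


def resolve_type(requested, props):
    """Resolve a requested identifier to a full validation type, following aliases."""
    available = full_types(props)
    if requested in available:
        return requested
    target = props.get("validator.typeAlias." + requested)
    if target in available:
        return target
    raise ValueError("Unknown validation type or alias: " + requested)


props = {
    "validator.type": "basic, large, preview",
    "validator.typeOptions.basic": "v2.1.0, v2.0.0",
    "validator.typeOptions.large": "v2.1.0, v2.0.0",
    "validator.typeAlias.basic_latest": "basic.v2.1.0",
    "validator.typeAlias.large_latest": "large.v2.1.0",
}

print(full_types(props))
print(resolve_type("basic_latest", props))
```

When a new version is released, only the validator.typeAlias properties need to change; clients that request basic_latest keep working unmodified.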

Domain aliases

Domain aliases are similar in concept to validation type aliases in that they allow you to adapt the validation to carry out based on the user’s request. Simply put, a user requests validation A and you internally carry out validation B.

Domain aliases, however, have a fundamentally different purpose. They are used to delegate requests to a completely separate validator configuration, defined as a separate domain. This means that not only are the requested validation types adapted, but the complete validator configuration is also loaded from the aliased domain. In this case, if domain A is defined as an alias for domain B, any requests to domain A are effectively redirected to domain B. This is not an actual web redirection, although in practice the result is similar.

The main reason to define an alias for a domain is to cover validator migrations and consolidations. This typically becomes interesting if you have defined several distinct validators over time, but now want to consolidate them into a single one to provide a unified user experience. Aggregating validation artefacts into a single validator is easily achieved, and they can then be exposed in a well-organised manner using groups, validation types and options.

While creating the consolidated validator is straightforward, you need to consider the existing users of the now legacy validator domains. This is where aliases come in, as they allow continued use of the legacy domains, while transparently delegating to the correct, consolidated, one. Importantly, this delegation covers all validator APIs (web user interface, REST API and SOAP API).

A domain alias is defined using the validator.domainAlias property, set with the name of the domain to delegate to. Complementing this, you can also define a set of validator.domainAlias.TYPE properties so that you can map your current validation types to their equivalent types in the target domain. Note that the TYPE postfix added to these properties must be the full validation type (validation type and option separated by a dot .), pointing similarly to the full validation type or an alias in the target domain. Mappings can be omitted for validation types having the same identifier in both the current and target domains.

Note

In case validation types of the current domain cannot be fully mapped to the target, the entire domain alias configuration is ignored and the validator outputs relevant warnings to its log.

To illustrate how domain aliases work with an example, let’s revisit our fictional purchase order validator, defined through an order domain with a configuration as follows:

# Title

validator.uploadTitle = Purchase Order Validator

# Validation types

validator.type = basic, large

# Options per type.

validator.typeOptions.basic = v1.1.0, v1.0.0

validator.typeOptions.large = v1.1.0, v1.0.0

# Validation artefact mappings

validator.schemaFile.basic.v1.1.0 = schemas/PurchaseOrder_v1.1.0.json

validator.schemaFile.basic.v1.0.0 = schemas/PurchaseOrder_v1.0.0.json

validator.schemaFile.large.v1.1.0 = schemas/PurchaseOrder_v1.1.0.json

validator.schemaFile.large.v1.0.0 = schemas/PurchaseOrder_v1.0.0.json

Now consider that alongside the purchase order validator you have also defined an invoice validator in a separate invoice domain:

# Title

validator.uploadTitle = Invoice Validator

# Validation types

validator.type = full, summary

# Validation artefact mappings

validator.schemaFile.full = schemas/Invoice.json

validator.schemaFile.summary = schemas/Invoice.json

In terms of filesystem configuration for the order and invoice domains, the validator’s resources are defined as follows:

validator

└── resources

├── order

│ ├── schemas

│ │ ├── PurchaseOrder_v1.1.0.json

│ │ └── PurchaseOrder_v1.0.0.json

│ └── config.properties

└── invoice

├── schemas

│ └── Invoice.json

└── config.properties

You now want to group both validators into a new, consolidated ecommerce validator, defined through an ecommerce domain. This domain includes all purchase order and invoice artefacts, introducing groups as a first selection for the document type to validate. The configuration file of this domain will be as follows:

# Title

validator.uploadTitle = eCommerce Validator

# Validation types

validator.type = order_basic, order_large, invoice_full, invoice_summary

# Options per purchase order type.

validator.typeOptions.order_basic = v1.1.0, v1.0.0

validator.typeOptions.order_large = v1.1.0, v1.0.0

# Validation type groups

validator.typeGroup.order = order_basic, order_large

validator.typeGroup.invoice = invoice_full, invoice_summary

validator.typeGroupLabel.order = Purchase order

validator.typeGroupLabel.invoice = Invoice

validator.typeGroupPresentation = split

validator.label.typeGroupLabel = Document type

# Validation artefact mappings for purchase orders

validator.schemaFile.order_basic.v1.1.0 = order/schemas/PurchaseOrder_v1.1.0.json

validator.schemaFile.order_basic.v1.0.0 = order/schemas/PurchaseOrder_v1.0.0.json

validator.schemaFile.order_large.v1.1.0 = order/schemas/PurchaseOrder_v1.1.0.json

validator.schemaFile.order_large.v1.0.0 = order/schemas/PurchaseOrder_v1.0.0.json

# Validation artefact mappings for invoices

validator.schemaFile.invoice_full = invoice/schemas/Invoice.json

validator.schemaFile.invoice_summary = invoice/schemas/Invoice.json

We will now revisit the purchase order and invoice validator configurations, to ensure existing users can seamlessly transition to the new ecommerce validator. This is achieved by defining a domain alias per case. The order domain’s configuration is extended as follows:

validator.uploadTitle = Purchase Order Validator

...

# Delegate to the ecommerce validator

validator.domainAlias = ecommerce

validator.domainAlias.basic.v1.1.0 = order_basic.v1.1.0

validator.domainAlias.basic.v1.0.0 = order_basic.v1.0.0

validator.domainAlias.large.v1.1.0 = order_large.v1.1.0

validator.domainAlias.large.v1.0.0 = order_large.v1.0.0

Similarly, the invoice domain’s configuration is extended as follows:

validator.uploadTitle = Invoice Validator

...

# Delegate to the ecommerce validator

validator.domainAlias = ecommerce

validator.domainAlias.full = invoice_full

validator.domainAlias.summary = invoice_summary

These alias definitions ensure that any requests to the legacy validators will be delegated transparently to the new consolidated domain. Revisiting the validator’s filesystem resources, the order, invoice and ecommerce domains are defined as follows:

validator

└── resources

├── order

│ ├── schemas

│ │ ├── PurchaseOrder_v1.1.0.json

│ │ └── PurchaseOrder_v1.0.0.json

│ └── config.properties

├── invoice

│ ├── schemas

│ │ └── Invoice.json

│ └── config.properties

└── ecommerce

├── order

│ └── schemas

│ ├── PurchaseOrder_v1.1.0.json

│ └── PurchaseOrder_v1.0.0.json

├── invoice

│ └── schemas

│ └── Invoice.json

└── config.properties

If your validator is self-hosted, this would be the updated resource root folder you would provide as its configuration. Alternatively, if your validators are hosted by the Interoperability Test Bed, you likely have separate repositories for each configuration. This is not an issue however, as all you need to do is ensure each domain is set up correctly and the alias definitions are in place.

Note

Domain aliases work when all domains are defined in the same validator application. If this is not the case, you will need another solution such as reverse proxy rewriting and/or redirects. As such approaches are outside the control of validators, they are outside the scope of this guide.
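The mapping rules for domain aliases described in this section (explicit mappings, implicit mappings for identical identifiers, and ignoring the alias when a type cannot be mapped) can be sketched in Python as follows. This is an illustration under stated assumptions rather than the validator's own code: the function name and the use of plain lists and dictionaries are hypothetical.

```python
def map_to_target(current_types, target_types, target_aliases, mappings):
    """Map each full validation type of the current domain to the target domain.

    mappings holds the validator.domainAlias.TYPE entries (TYPE -> target type).
    Returns the complete mapping, or None if any type cannot be mapped,
    in which case the whole domain alias would be ignored.
    """
    resolved = {}
    for current in current_types:
        # An omitted mapping means the same identifier is expected in the target.
        candidate = mappings.get(current, current)
        if candidate in target_types or candidate in target_aliases:
            resolved[current] = candidate
        else:
            return None
    return resolved


# Full validation types of the consolidated "ecommerce" domain.
ecommerce_types = [
    "order_basic.v1.1.0", "order_basic.v1.0.0",
    "order_large.v1.1.0", "order_large.v1.0.0",
    "invoice_full", "invoice_summary",
]

# validator.domainAlias.TYPE entries of the legacy "invoice" domain.
invoice_mappings = {"full": "invoice_full", "summary": "invoice_summary"}

print(map_to_target(["full", "summary"], ecommerce_types, [], invoice_mappings))
```

Calling the function with an incomplete mapping (for example omitting the entry for summary) returns None, mirroring the case where the alias configuration is ignored and warnings are logged.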

User-provided validation artefacts

Another available option on schema file configuration is to allow a given validation type to support user-provided schemas. Such schemas would be considered in addition to any pre-configured local and remote schemas. Enabling user-provided schemas is achieved through the validator.externalSchemas property:

...

validator.externalSchemas.TYPE = required

These properties allow three possible values:

required: The relevant schema(s) must be provided by the user.

optional: Providing the relevant schema(s) is allowed but not mandatory.

none (the default value): No such schema(s) are requested or considered.

Specifying that for a given validation type you allow users to provide schemas will result in any such schemas being combined with your pre-defined ones. This could be useful in scenarios where you want to define a common validation base but also allow ad-hoc extensions, for example restrictions defined at user level (e.g. national validation rules to consider in addition to a common set of EU rules). Similarly to pre-defined schemas, you can also define the validator.externalSchemaCombinationApproach.TYPE property with values allOf (the default), anyOf and oneOf to specify how they are combined. Note that when you have both pre-configured and user-provided schemas, each set is validated separately based on its defined combination semantics (properties validator.schemaFile.TYPE.combinationApproach and validator.externalSchemaCombinationApproach.TYPE), but for an overall success both sets of schemas need to succeed.
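The combination semantics described above can be summarised with a short Python sketch. Each schema's outcome is represented as a boolean; the function names are hypothetical, and treating an empty set of schemas as successful is an assumption made here for illustration (e.g. when user-provided schemas are optional and none were supplied).

```python
def combine(results, approach="allOf"):
    """Combine per-schema outcomes (True means the schema validated successfully)."""
    if not results:
        # Assumption: nothing to check counts as success.
        return True
    passed = sum(1 for outcome in results if outcome)
    if approach == "allOf":
        return passed == len(results)
    if approach == "anyOf":
        return passed >= 1
    if approach == "oneOf":
        return passed == 1
    raise ValueError("Unsupported combination approach: " + approach)


def overall_success(predefined, predefined_approach, external, external_approach):
    """Both sets are evaluated separately and both must succeed."""
    return combine(predefined, predefined_approach) and combine(external, external_approach)


# Two pre-defined schemas (both pass) and two user-provided ones (one passes).
print(overall_success([True, True], "allOf", [True, False], "anyOf"))
print(overall_success([True, True], "allOf", [True, False], "allOf"))
```

The second call fails overall even though the pre-defined schemas pass, because the user-provided set does not satisfy its own allOf semantics.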

Note

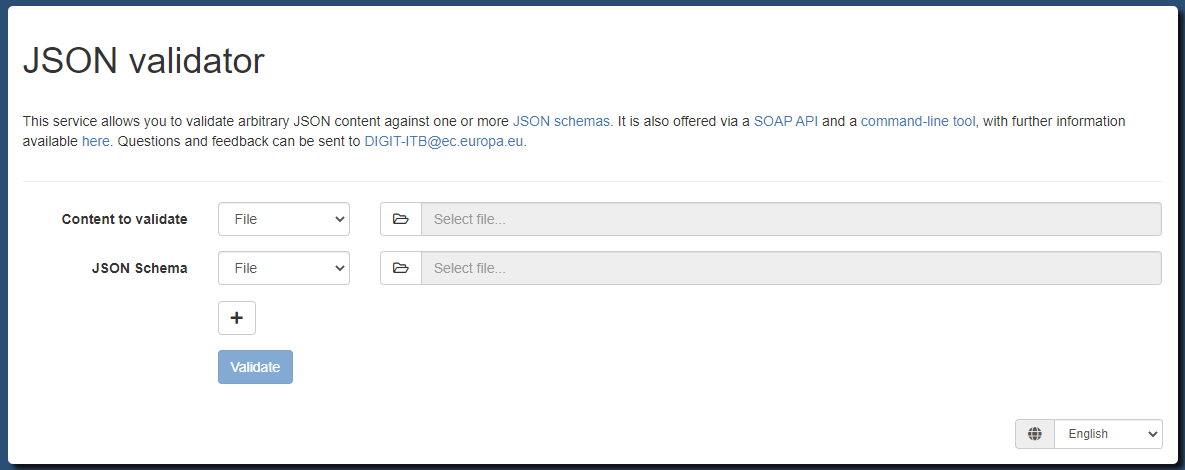

Generic validator: It is possible to not predefine any schemas resulting in a validator that is truly generic, expecting all schemas to be provided by users. Such a generic instance actually exists at https://www.itb.ec.europa.eu/json/any/upload. This generic validator will automatically be set up if you don’t specify validator configurations.

Input pre-processing

An advanced configuration option available to you is to enable for a given validation type the pre-processing of the validator’s input. Pre-processing allows you to execute a JSONPath query on the input in order to filter the part to be used for validation, rather than using the entire document. The typical use case for this is when the content of interest is wrapped in a container structure and validating the relevant parts of such structure suffices. In this case your input should be tailored towards your business payload and ignore the rest of the container structures to focus on the payload itself. Alternatively you may have separate validation types focusing on different aspects of the JSON document.

Pre-processing of the input is configured by means of JSONPath (XPath for JSON) expressions, which you apply to the validation types that need them. Once your validator receives the JSON input for a given validation type, it checks whether a JSONPath expression is defined for that type and, if so, uses it to pre-process the input before validating. Input pre-processing expressions are configured through validator.input.preprocessor.TYPE properties in your domain configuration file.

For example if you have JSON content such as the following:

{

"shipTo": {

"name": "John Doe",

"street": "Europa Avenue 123",

"city": "Brussels",

"zip": 1000

},

"billTo": {

"name": "Jane Doe",

"street": "Europa Avenue 210",

"city": "Brussels",

"zip": 1000

},

"orderDate": "2020-01-22",

"comment": "Send in one package please",

"items": [

{

"partNum": "XYZ-123876",

"productName": "Mouse",

"quantity": 20,

"priceEUR": 15.99,

"comment": "Confirm this is wireless"

},

{

"partNum": "ABC-32478",

"productName": "Keyboard",

"quantity": 15,

"priceEUR": 25.50

}

]

}

You could define different types of validation to focus on the billTo object or the complete document as follows:

...

validator.type = billing, full

...

# Expression to extract the billing information.

validator.input.preprocessor.billing = $.billTo

# No need to specify an expression for the "full" type as content will be validated as-is.
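To see what the $.billTo expression selects, the following Python sketch evaluates it against a shortened version of the sample document. It is only an illustration: the select function is a hypothetical helper that supports a tiny JSONPath subset ("$" followed by dotted member names), whereas the validator supports full JSONPath expressions.

```python
import json


def select(document, expression):
    """Evaluate a minimal JSONPath subset: '$' followed by dotted member names."""
    if not expression.startswith("$"):
        raise ValueError("Expression must start with '$'")
    node = document
    for name in expression[1:].split("."):
        if name:  # skip the empty segment before the first dot
            node = node[name]
    return node


order = json.loads("""
{
  "shipTo": {"name": "John Doe", "city": "Brussels", "zip": 1000},
  "billTo": {"name": "Jane Doe", "city": "Brussels", "zip": 1000},
  "orderDate": "2020-01-22"
}
""")

# The "billing" type validates only this extracted object.
print(json.dumps(select(order, "$.billTo")))

# The "full" type has no expression, which is equivalent to selecting the root.
print(select(order, "$") is order)
```

The schema configured for the billing type would then need to describe the billTo object alone, not the complete purchase order.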

Supporting YAML input

Note

For a validator focused only on YAML you can also refer to our YAML validation guide.

YAML is a popular serialisation language used primarily to define configuration files. It is semantically equivalent to JSON and can thus be validated and processed using JSON Schema and JSON tools. The validator supports YAML as an input format allowing you to validate YAML alongside or instead of JSON content.

YAML support is by default disabled, but you can choose to either support or enforce it by means of one or more validator.yamlSupport.TYPE properties, where TYPE is a placeholder for a full validation type (type plus option if options are defined). You may also use the validator.yamlSupport property without a TYPE postfix, to define your default level of support for YAML that you may then override for specific types. Both these properties support the following values:

force: Only YAML input can be provided.

support: Both YAML and JSON input can be provided.

none (the default value): YAML input is not supported.

Revisiting our example specification, you may also want to allow validation of purchase orders in YAML format such as the following example:

---

shipTo:

  name: John Doe

  street: Europa Avenue 123

  city: Brussels

  zip: 1000

billTo:

  name: Jane Doe

  street: Europa Avenue 210

  city: Brussels

  zip: 1000

orderDate: '2020-01-22'

items:

  - partNum: XYZ-123876

    productName: Mouse

    quantity: 20

    priceEUR: 8.99

    comment: Confirm this is wireless

  - partNum: ABC-32478

    productName: Keyboard

    quantity: 5

    priceEUR: 25.5

To support YAML alongside JSON for all validation types, adapt your configuration as follows:

...

# Support YAML input for all validation types.

validator.yamlSupport = support

Alternatively if you defined a validation type specifically for YAML and want to prevent its use elsewhere, your configuration would be similar to the following:

validator.type = basic, large, largeYaml

...

# Prevent YAML input by default (note, this is also the default setting).

validator.yamlSupport = none

# Enforce the use of YAML for a specific validation type.

validator.yamlSupport.largeYaml = force
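The way a type-specific validator.yamlSupport.TYPE property overrides the default can be sketched in Python as follows. This illustrates the lookup order described above and is not the validator's implementation; the function names are hypothetical and the configuration is a plain dictionary.

```python
VALID_LEVELS = ("force", "support", "none")


def yaml_support(props, full_type):
    """Return the effective YAML support level for a full validation type."""
    default = props.get("validator.yamlSupport", "none")
    level = props.get("validator.yamlSupport." + full_type, default)
    if level not in VALID_LEVELS:
        raise ValueError("Unsupported yamlSupport value: " + level)
    return level


def accepts(props, full_type, input_format):
    """Check whether 'json' or 'yaml' input is accepted for the given type."""
    level = yaml_support(props, full_type)
    if input_format == "yaml":
        return level in ("force", "support")
    return level != "force"


props = {
    "validator.type": "basic, large, largeYaml",
    "validator.yamlSupport": "none",
    "validator.yamlSupport.largeYaml": "force",
}

for vtype in ("basic", "largeYaml"):
    print(vtype, "yaml:", accepts(props, vtype, "yaml"), "json:", accepts(props, vtype, "json"))
```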

Supporting multiple languages

Certain configuration properties we have seen up to now define texts that are visible to the validator’s users. Examples of these include the title of the validator’s user interface (validator.uploadTitle) or the labels to present for the available validation types (validator.typeLabel.TYPE), which in the sample configuration are set with English values. Depending on your validator’s audience you may want to switch to a different language or support several languages at the same time. Supporting multiple languages affects:

The texts, labels and messages presented on the validator’s user interface.

The reports produced after validating content via any of the validator’s interfaces.

The text values used by default by the validator are defined in English (see default values here), with English being the language considered by the validator if no other is selected. If your validator needs to support only a single language, a simple approach is to ensure that the domain-level configuration properties for texts presented to users are defined in the domain configuration file with the values for your selected language. Note that as long as the validator’s target language is an EU official language you need not provide translations for user interface labels and messages as these are defined by the validator itself. You are nonetheless free to redefine these to override the defaults or to define them for a non-supported language.

In case you want your validator to support multiple languages at the same time you need to adapt your configuration to define the supported languages and their specific translations. To do this adapt your domain configuration property file making use of the following properties:

validator.locale.available: The list of languages to be supported by the validator, provided as a comma-separated list of language codes (locales). The order these are defined with determines their presentation order in the validator’s user interface.

validator.locale.default: The validator’s default language, considered if no specific language has been requested. If multiple languages are supported the default needs to be set to one of these.

validator.locale.translations: The path to a folder (absolute or relative to the domain configuration file) that contains the translation property files for the validator’s supported languages.

Each language (locale) is defined as a 2-character lowercase language code (based on ISO 639), followed by an optional 2-character uppercase country code (based on ISO 3166) separated using an underscore character (_). The format is in fact identical to that used to define locales in the Java language. Valid examples include “fr” for French, “fr_FR” for French in France, or “fr_BE” for French in Belgium. Such language codes are the values expected to be used for properties validator.locale.available and validator.locale.default.
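As a quick way to check locale values before adding them to your configuration, the format described above can be expressed as a regular expression. The following Python sketch is an illustration only: it checks the shape of the code, not whether the language or country actually exists, and the function name is hypothetical.

```python
import re

# Two lowercase letters, optionally followed by "_" and two uppercase letters.
LOCALE_PATTERN = re.compile(r"[a-z]{2}(_[A-Z]{2})?")


def is_valid_locale(code):
    """Check that a locale follows the 'll' or 'll_CC' format."""
    return LOCALE_PATTERN.fullmatch(code) is not None


for code in ("fr", "fr_BE", "FR", "fr-BE"):
    print(code, is_valid_locale(code))
```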

Regarding property validator.locale.translations, the value is expected to be a folder containing the translation files for your selected languages. These are defined exactly as you would define a resource bundle in a Java program, specifically:

The names of all property files start with the same prefix. Any value can be selected with good examples being “labels” or “translations”.

The common prefix is followed by the relevant locale value (language code and country code) separated with an underscore.

The files’ extension is set as “.properties”.

Considering the above, good examples of translation property file names would be “labels_de.properties”, “labels_fr.properties” and “labels_fr_FR.properties”. Note that these files are implicitly hierarchical meaning that for related locales you need not redefine all properties. For example you may have your French texts defined in “labels_fr.properties” and only override what you need in “labels_fr_BE.properties”. You can also define an overall default translation file by omitting the locale in its name (labels.properties) which will be used when no locale-specific file exists or if it exists but does not include the given property key. Note additionally that if you define translatable text values in your main domain configuration file these are considered as overall defaults if no specific translations could be found in translation files.

In terms of contents, the translation files are simple property files including key-value pairs. Each such pair defines as its key the property key for the given text, with the value being the translation to use. The properties that can be defined in such files are:

Any domain-level configuration properties that are marked as being a translatable String.

Any user interface labels and messages if you want to override the default translations.

Considering that you typically wouldn’t need to override labels and messages, the texts you would need to translate are the ones relevant to your specification. These are most often the following:

The title of the validator’s UI (validator.uploadTitle).

The UI’s HTML banner content (validator.bannerHtml), which can be customised as explained in Adding a custom banner and footer.

The descriptions for the validation types that you define and their options (validator.typeLabel.TYPE, validator.optionLabel.OPTION, validator.typeOptionLabel.TYPE.OPTION and validator.completeTypeOptionLabel.TYPE.OPTION).

To illustrate how all this comes together let’s revisit our Purchase Order example. In our current, single-language and English-only setup, the configuration files are structured as follows:

validator

└── resources

└── order

├── schemas

│ ├── PurchaseOrder.schema.json

│ └── PurchaseOrder-large.schema.json

└── config.properties

The domain configuration file (config.properties) itself defines the user-presented texts (the validator.typeLabel.TYPE and validator.uploadTitle properties):

validator.type = basic, large

validator.typeLabel.basic = Basic purchase order

validator.typeLabel.large = Large purchase order

validator.schemaFile.basic = schemas/PurchaseOrder.schema.json

validator.schemaFile.large = schemas/PurchaseOrder.schema.json, schemas/PurchaseOrder-large.schema.json

validator.uploadTitle = Purchase Order Validator

Starting from this point we will make the necessary changes to support German and French translations alongside English (which remains the default language). The first step is to adapt the config.properties file to remove the contained translations. We could have kept these here for English but as we will be adding specific translation files it is cleaner to move all translations to them. The content of config.properties now becomes as follows:

validator.type = basic, large

validator.schemaFile.basic = schemas/PurchaseOrder.schema.json

validator.schemaFile.large = schemas/PurchaseOrder.schema.json, schemas/PurchaseOrder-large.schema.json

validator.locale.available = en,fr,de

validator.locale.default = en

validator.locale.translations = translations

To define the translations we will introduce a new folder translations (as defined in property validator.locale.translations) that includes the property files per locale:

validator

└── resources

└── order

├── schemas

│ ├── PurchaseOrder.schema.json

│ └── PurchaseOrder-large.schema.json

├── translations

│ ├── labels_en.properties

│ ├── labels_fr.properties

│ └── labels_de.properties

└── config.properties

The English translations are provided in labels_en.properties (these are simply moved here from the config.properties file):

validator.typeLabel.basic = Basic purchase order

validator.typeLabel.large = Large purchase order

validator.uploadTitle = Purchase Order Validator

French translations are defined in labels_fr.properties:

validator.typeLabel.basic = Bon de commande de base

validator.typeLabel.large = Bon de commande important

validator.uploadTitle = Validateur de bon de commande

And finally German translations are defined in labels_de.properties:

validator.typeLabel.basic = Grundbestellung

validator.typeLabel.large = Großbestellung

validator.uploadTitle = Bestellbestätigung

This completes the validator’s localisation configuration. With this setup in place, the user will be able to select one of the supported languages to change the validator’s user interface and resulting report. Note that localised reports can also now be produced from the validator’s REST API, SOAP API and command-line tool.
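The hierarchical lookup of translations described in this section (locale-specific file, then the language-only file, then the default file, then the domain configuration) can be sketched in Python as follows. This is a simplified illustration of the documented fallback order, not the validator's or Java's actual resource bundle logic; the function names are hypothetical and the translation files are represented as in-memory dictionaries.

```python
def candidate_files(prefix, locale):
    """List translation file names from most to least specific."""
    parts = locale.split("_")
    names = []
    while parts:
        names.append(prefix + "_" + "_".join(parts) + ".properties")
        parts.pop()
    names.append(prefix + ".properties")
    return names


def lookup(key, locale, bundles, domain_config, prefix="labels"):
    """Find the text for a key, falling back as described in this section."""
    for name in candidate_files(prefix, locale):
        value = bundles.get(name, {}).get(key)
        if value is not None:
            return value
    # Values in the main domain configuration act as overall defaults.
    return domain_config.get(key)


bundles = {
    "labels_en.properties": {"validator.uploadTitle": "Purchase Order Validator"},
    "labels_fr.properties": {"validator.uploadTitle": "Validateur de bon de commande"},
}

print(candidate_files("labels", "fr_BE"))
print(lookup("validator.uploadTitle", "fr_BE", bundles, {}))
```

Because no labels_fr_BE.properties file exists in this example, a request for fr_BE falls back to the French texts in labels_fr.properties.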

Validation metadata in reports

The machine-processable report produced when calling the validator via its SOAP API, REST API, or downloaded from its user interface, uses the GITB Test Reporting Language (TRL). The GITB TRL is an XML format, but when using the REST API in particular, it may also be generated in JSON.

Apart from defining the report’s main content, the GITB TRL format foresees optional metadata to provide information on the validation service itself and the type of validation applied. Specifically:

An identifier and name for the report.

A name and version for the validator.

The validation profile considered as well as any type-specific customisation.

The inclusion of all such properties is driven through your domain configuration file. The report and validator metadata are optional fixed values that you may configure to apply to all produced reports. The validation profile and its customisation however, apart from also supporting overall default values, can furthermore be set with values depending on configured validation types. If nothing is configured, the only metadata included by default is the profile, that is set to the validation type that was considered to carry out the validation (selected by the user, or implicit if there is a single defined type or a default).

The following table summarises the available report metadata, the relevant configuration properties and their configuration approach:

| Report element | Configuration property | Description |
|---|---|---|
| | | Identifier for the overall report, set as a string value. |
| name attribute of TestStepReport | validator.report.name | Name for the overall report, set as a string value. |
| overview/validationServiceName | validator.report.validationServiceName | Name for the validator, set as a string value. |
| overview/validationServiceVersion | validator.report.validationServiceVersion | Version for the validator, set as a string value. |
| overview/profileID | validator.report.profileId | The applied profile (validation type). Multiple entries can be added for configured validation types added as a postfix. When defined without a postfix the value is considered as an overall default. If entirely missing this is set to the applied validation type. |
| | | A customisation of the applied profile. Multiple entries can be added for configured validation types added as a postfix. When defined without a postfix the value is considered as an overall default. |

To illustrate the above properties consider first the following XML report metadata, produced by default if no relevant configuration is provided. Only the profile is included, set to the validation type that was used:

<TestStepReport>

...

<overview>

<profileID>basic</profileID>

</overview>

...

</TestStepReport>

Extending now our domain configuration, we can include additional metadata as follows:

# A name to display for the validator.

validator.report.validationServiceName=Purchase Order Validator

# A version to display for the validator.

validator.report.validationServiceVersion=v1.0.0

# The name for the overall report.

validator.report.name=Purchase order validation report

# The profile to display depending on the selected validation type (basic or large).

validator.report.profileId.basic=Basic purchase order

validator.report.profileId.large=Large purchase order

Applying the above configuration will result in GITB TRL reports produced with the following metadata included:

<TestStepReport name="Purchase order validation report">

...

<overview>

<profileID>Basic purchase order</profileID>

<validationServiceName>Purchase Order Validator</validationServiceName>

<validationServiceVersion>v1.0.0</validationServiceVersion>

</overview>

...

</TestStepReport>

In a JSON report produced by the validator’s REST API the metadata would be included as follows:

{

...

"overview": {

"profileID": "Basic purchase order",

"validationServiceName": "Purchase Order Validator",

"validationServiceVersion": "v1.0.0"

},

...

"name": "Purchase order validation report"

}
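A client consuming the JSON report could read this metadata as in the following Python sketch. It assumes only the fields shown in the example above (the rest of the report is omitted), and the report_metadata function is a hypothetical helper, not part of any validator client library.

```python
import json

# Only the metadata fields from the example above; other report content is omitted.
report_text = """
{
  "overview": {
    "profileID": "Basic purchase order",
    "validationServiceName": "Purchase Order Validator",
    "validationServiceVersion": "v1.0.0"
  },
  "name": "Purchase order validation report"
}
"""


def report_metadata(report):
    """Extract the optional metadata, using None for anything not present."""
    overview = report.get("overview", {})
    return {
        "reportName": report.get("name"),
        "profile": overview.get("profileID"),
        "validatorName": overview.get("validationServiceName"),
        "validatorVersion": overview.get("validationServiceVersion"),
    }


metadata = report_metadata(json.loads(report_text))
for key, value in metadata.items():
    print(key + ":", value)
```

Since all metadata except the profile is optional, the helper tolerates missing fields instead of failing.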

Rich text support in report items

A validation report’s items represent the findings of a given validation run. The description of report items is by default treated as simple text and displayed as such in all report outputs. If this description includes rich text (i.e. HTML) content, the validator’s user interface will escape and display it as-is without rendering it.

It is possible to configure your validator to expect report items with descriptions including rich text, and specifically HTML links (anchor elements). If enabled, links will be rendered as such in the validator’s user interface and PDF reports, so that when clicked, their target is opened in a separate window. A typical use case for this would be to link each reported finding with online documentation that provides further information or a normative reference.

To enable HTML links in report items set property validator.richTextReports to true as part of your domain configuration properties.

validator.richTextReports = true

It is important to note that with this feature enabled, the description of report items is sanitised to remove any rich content that is not specifically a link. If found, non-link HTML tags are stripped from descriptions, leaving only their contained text (if present).
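To give an idea of the effect of this sanitisation, the following Python sketch keeps anchor elements and strips all other tags while preserving their text. It is an approximation written for illustration, using Python's standard html.parser module; the class and function names are hypothetical and the validator's actual sanitisation rules may differ (for example regarding which link attributes or URL schemes are allowed).

```python
from html import escape
from html.parser import HTMLParser


class LinkOnlySanitiser(HTMLParser):
    """Rebuild a description keeping only <a href="..."> elements and text."""

    def __init__(self):
        super().__init__(convert_charrefs=True)
        self.parts = []
        self.open_links = 0

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.parts.append('<a href="%s">' % escape(href, quote=True))
                self.open_links += 1
        # Any other start tag is dropped.

    def handle_endtag(self, tag):
        if tag == "a" and self.open_links > 0:
            self.parts.append("</a>")
            self.open_links -= 1
        # Any other end tag is dropped.

    def handle_data(self, data):
        # Text content is always kept (escaped again for safe output).
        self.parts.append(escape(data, quote=False))


def keep_links_only(description):
    """Strip non-link HTML tags from a report item description."""
    parser = LinkOnlySanitiser()
    parser.feed(description)
    parser.close()
    return "".join(parser.parts)


print(keep_links_only('See <b>rule</b> <a href="https://example.org/r1">R1</a>.'))
```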

Step 4: Setup validator as Docker container

Note

When to set up a Docker container: The purpose of setting up your validator as a Docker container is to host it yourself or run it locally on workstations. If you prefer or don’t mind the validator being accessible over the Internet it is simpler to delegate hosting to the Test Bed team by reusing the Test Bed’s infrastructure. If this is the case skip this section and go directly to Step 5: Setup validator on Test Bed. Note however that even if you opt for a validator managed by the Test Bed, it may still be interesting to create a Docker image for development purposes (e.g. to test new validation artefact versions) or to make available to your users as a complementary service (i.e. use online or download and run locally).

Once the validator’s configuration is ready (configuration file and validation artefacts) you can proceed to create a Docker image.

The configuration for your image is driven by means of a Dockerfile. Create this file in the validator folder with the following contents:

FROM isaitb/json-validator:latest

COPY resources /validator/resources/

ENV validator.resourceRoot /validator/resources/

This Dockerfile represents the simplest Docker configuration you can provide for the validator. Let’s analyse each line:

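| FROM isaitb/json-validator:latest | This tells Docker that your image will be built over the latest version of the Test Bed’s isaitb/json-validator image. |
| COPY resources /validator/resources/ | This copies your resources folder to the image under path /validator/resources/. |
| ENV validator.resourceRoot /validator/resources/ | This instructs the validator that it should consider as the root of all its configuration resources the /validator/resources/ folder. |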

The contents of the validator folder should now be as follows:

validator
├── resources
│   └── order
│       ├── shapes
│       │   ├── PurchaseOrder.schema.json
│       │   └── PurchaseOrder-large.schema.json
│       └── config.properties
└── Dockerfile

That’s it. To build the Docker image open a command prompt in the validator folder and issue:

docker build -t po-validator .

This command will create a new local Docker image named po-validator based on the Dockerfile it finds in the current directory.

It will proceed to download missing images (e.g. the isaitb/json-validator:latest image) and eventually print the following output:

Sending build context to Docker daemon 32.77kB

Step 1/3 : FROM isaitb/json-validator:latest

---> 39ccf8d64a50

Step 2/3 : COPY resources /validator/resources/

---> 66b718872b8e

Step 3/3 : ENV validator.resourceRoot /validator/resources/

---> Running in d80d38531e11

Removing intermediate container d80d38531e11

---> 175eebf4f59c

Successfully built 175eebf4f59c

Successfully tagged po-validator:latest

The new po-validator:latest image can now be pushed to a local Docker registry or to the Docker Hub. In our case we will proceed directly to run this as follows:

docker run -d --name po-validator -p 8080:8080 po-validator:latest

This command will create a new container named po-validator based on the po-validator:latest image you just built. It is set to run in the background (-d) and expose its internal listen port through the Docker machine (-p 8080:8080). Note that by default the listen port of the container (which you can map to any available host port) is 8080.
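If you start the container from a script, you may want to wait until the validator actually responds before using it. The following is a minimal sketch of such a check, not part of the validator itself. The validator_url and wait_for_validator functions are hypothetical helpers, and the /json/DOMAIN/upload address pattern is an assumption based on the address of the generic "any" validator mentioned later in this guide.

```shell
# Hypothetical helper: build the address of the validator's upload page.
# Assumption: the page for a domain is served at /json/DOMAIN/upload.
validator_url() {
  host_port="$1"
  domain="$2"
  printf 'http://localhost:%s/json/%s/upload\n' "$host_port" "$domain"
}

# Hypothetical helper: poll the given address until it answers successfully
# or the number of attempts (default 30, two seconds apart) is exhausted.
wait_for_validator() {
  url="$1"
  attempts="${2:-30}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # -f makes curl return a non-zero status on HTTP error responses.
    if curl -fsS -o /dev/null "$url"; then
      echo "Validator is up at $url"
      return 0
    fi
    i=$((i + 1))
    sleep 2
  done
  echo "Validator did not respond at $url" >&2
  return 1
}
```

With the container started as above, calling `wait_for_validator "$(validator_url 8080 order)"` would block until the purchase order validator answers on host port 8080, or fail after roughly one minute.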

Your validator is now online and ready to validate JSON content. If you want to try it out immediately skip to Step 6: Use the validator. Otherwise, read on to see additional configuration options for the image.

Running without a custom Docker image

The discussed approach involved building a custom Docker image for your validator. Doing so allows you to run the validator yourself but also potentially push it to a public registry such as the Docker Hub. This would then allow anyone else to pull it and run a self-contained copy of your validator.

If such a use case is not important for you, or if you want to only use Docker for your local artefact development, you could also skip creating a custom image and use the base isaitb/json-validator image directly. To do so you would need to:

Define a named or unnamed Docker volume pointing to your configuration files.

Run your container by configuring it with the volume.

Considering the same file structure of the /validator folder you can launch your validator using an unnamed volume as follows:

docker run -d --name po-validator -p 8080:8080 \
  -v /validator/resources:/validator/resources/ \
  -e validator.resourceRoot=/validator/resources/ \
  isaitb/json-validator

As you see here we create the validator directly from the base image and pass it our resource root folder as a volume. When doing so you also need to make sure that the validator.resourceRoot environment variable is set to the path within the container.

Using this approach to run your validator has the drawback of being unable to share it as-is with other users. The benefit however is one of simplicity given that there is no need to build intermediate images. As such, updating the validator for configuration changes means that you only need to restart your container.

Note

Running the default Docker image can also be done without providing a validator.resourceRoot. If you decide to do this, a generic instance with the any validator will automatically be set up for you and you will be able to access it at http://localhost:8080/json/any/upload.

Configuring additional validation domains

Up to this point you have configured validation for purchase orders which defines one or more validation types (basic and large). This configuration can be extended by providing additional types to reflect:

Additional profiles with different business rules (e.g. minimal).

Specification versions (e.g. basic_v1.0, large_v1.0, basic_v1.1_beta).

Other types of content that are linked to purchase orders (e.g. purchase_order_basic_v1.0 and order_receipt_v1.0).

All such extensions would involve defining potentially additional validation artefacts and updating the config.properties file accordingly.

Apart from extending the validation possibilities linked to purchase orders you may want to configure a completely separate validator to address an unrelated specification that would most likely not be aimed at the same user community. To do so you have two options:

Repeat the previous steps to define a separate configuration and a separate Docker image. In this case you would be running two separate containers that are fully independent.

Reuse your existing validator instance to define a new validation domain. The result will be two validation services that are logically separate but are running as part of a single validator instance.

The rationale behind the second option is simply one of required resources. If you are part of an organisation that needs to support validation for dozens of different types of JSON content that are unrelated, it would probably be preferable to have a single application to host rather than one per specification.

Note

Sharing artefact files across domains: Setting the application property validator.restrictResourcesToDomain to false allows you to add paths of validation artefacts that are outside of the domain root folder. This enables sharing artefacts between different domains.

In your current single domain setup, the purchase order configuration is reflected through folder order. The name of this folder is also by default assumed to match the name of the domain. A new domain could be named invoice that is linked to JSON invoices. This is represented by an invoice folder next to order that similarly contains its validation artefacts and domain-level configuration property file. Considering this new domain, the contents of the validator folder would be as follows:

validator
├── resources
│   ├── invoice
│   │   └── (Further contents skipped)
│   └── order
│       └── (Further contents skipped)
└── Dockerfile

If you were now to rebuild the validator’s Docker image this would set up two logically-separate validation domains (invoice and order).
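Before rebuilding the image it can be useful to check which folders would be picked up as domains. By default the validator selects as domains all subfolders of the resource root that contain a configuration property file. The sketch below mimics that rule from the command line. The list_domains function is a hypothetical helper, and it assumes that each domain's configuration file is named config.properties as in this guide's example.

```shell
# Hypothetical helper: print the name of every direct subfolder of the given
# resource root that contains a config.properties file (assumed file name).
list_domains() {
  root="$1"
  for dir in "$root"/*/; do
    # Skip folders without a domain configuration file.
    [ -f "${dir}config.properties" ] || continue
    basename "$dir"
  done
}
```

Running `list_domains resources` from the validator folder should then list invoice and order, while any folder without a configuration file would be left out.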

Note

Validation domains vs types: In almost all scenarios you should be able to address your validation needs by having a single validation domain with multiple validation types. Validation types under the same domain will all be presented as options for users. Splitting in domains would make sense if you don’t want the users of one domain to see the supported validation types of other domains.

Important: Support for such configuration is only possible if you are defining your own validator as a Docker image. If you plan to use the Test Bed’s shared validator instance (see Step 5: Setup validator on Test Bed), your configuration needs to be limited to a single domain. Note of course that if you need additional domains you can in this case simply repeat the configuration process multiple times.

Additional configuration options

We have seen up to now that configuring how validation takes place is achieved through domain-level configuration properties provided in the domain configuration file (file config.properties in our example). When setting up the validator as a Docker image you may also make use of application-level configuration properties to adapt the overall validator’s operation. Such configuration properties are provided as environment variables through ENV directives in the Dockerfile.

We already saw this when defining the validator.resourceRoot property that is the only mandatory property for which no default exists. Other such properties that you may choose to override are:

validator.domain: A comma-separated list of names that are to be loaded as the validator’s domains. By default the validator scans the provided validator.resourceRoot folder and selects as domains all subfolders that contain a configuration property file (folder order in our case). You may want to configure the list of folder names to consider if you want to ensure that other folders get ignored.

validator.domainName.DOMAIN: A mapping for a domain (replacing the DOMAIN placeholder) that defines the name that should be presented to users. This would be useful if the folder name itself (order in our example) is not appropriate (e.g. if the folder was named files).

validator.rateLimit.*: A series of properties that allow you to configure validation rate limits per client IP address and requested endpoint.

The following example Dockerfile illustrates use of these properties. The values set correspond to the applied defaults so the resulting Docker images from this Dockerfile and the original one (see Step 4: Setup validator as Docker container) are in fact identical:

FROM isaitb/json-validator:latest

COPY resources /validator/resources/

ENV validator.resourceRoot /validator/resources/

ENV validator.domain order

ENV validator.domainName.order order

In case you want to configure validation rate limits for your validator (per validator instance), the following configuration shows an example setup:

# Enable validation rate limits (by default no limits are enforced).

ENV validator.rateLimit.enabled true

# When a limit is exceeded return a 429 "Too Many Requests" response (including a Retry-After header).

ENV validator.rateLimit.warnOnly false

# Read the client's IP address from the X-Real-IP header (in case the validator is proxied).

ENV validator.rateLimit.ipHeader X-Real-IP

# Apply limits per minute of 100 for the UI, 500 for the REST API, 50 for the bulk REST API, and 400 for the SOAP API.

ENV validator.rateLimit.capacity.uiValidate 100

ENV validator.rateLimit.capacity.restValidate 500

ENV validator.rateLimit.capacity.restValidateMultiple 50

ENV validator.rateLimit.capacity.soapValidate 400

See Application-level configuration for the full list of supported application-level properties.

Finally, it may be the case that you need to adapt further configuration properties that relate to how the validator’s application is run. The validator is built as a Spring Boot application which means that you can override all configuration properties by means of environment variables. This is rarely needed as you can achieve most important configuration through the way you run the Docker container (e.g. defining port mappings). Nonetheless the following adapted Dockerfile shows how you could ensure the validator’s application starts up on another port (9090) and uses a specific context path (/ctx).

FROM isaitb/json-validator:latest

COPY resources /validator/resources/

ENV validator.resourceRoot /validator/resources/

ENV server.servlet.context-path /ctx

ENV server.port 9090

Note

Custom port: Even if you define the server.port property to a different value other than the default 8080

this remains internal to the Docker container. The port through which you access the validator will be the one you

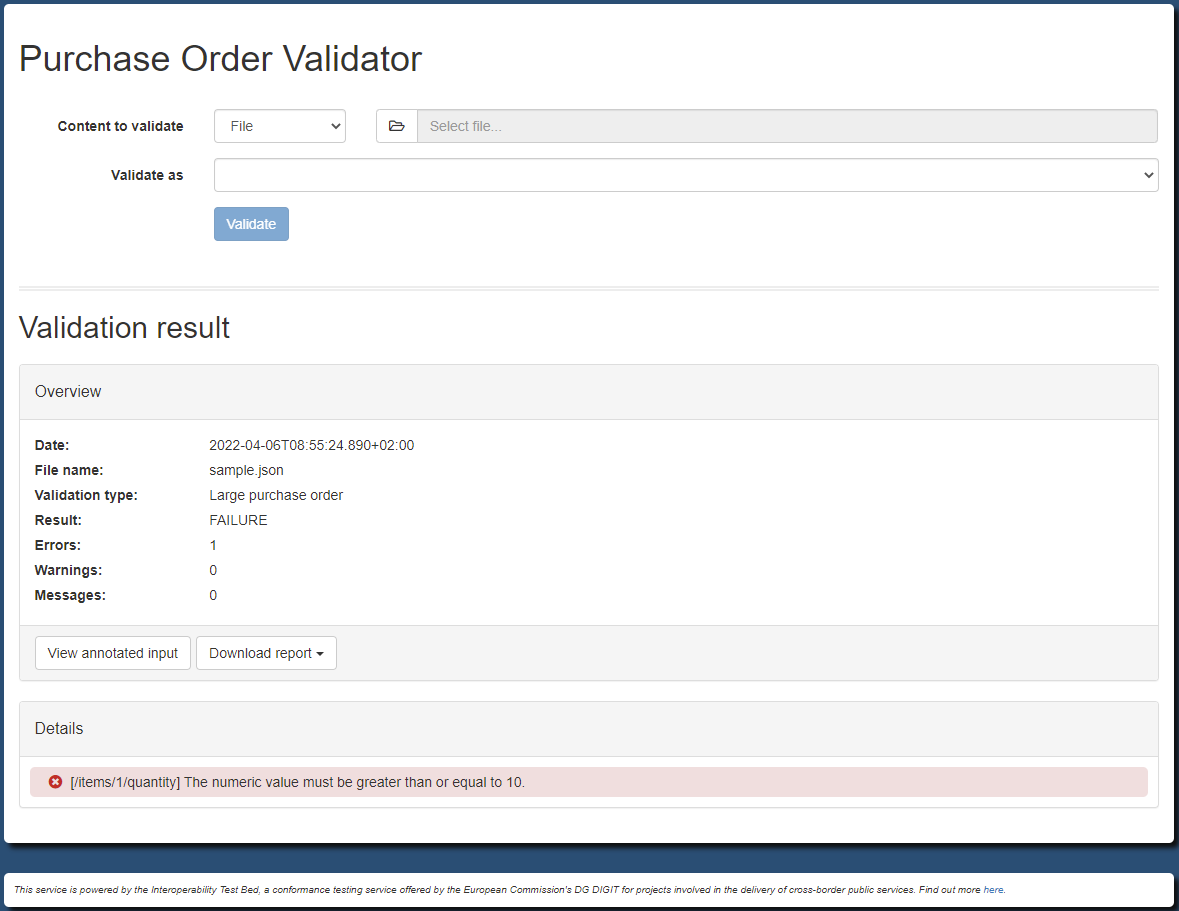

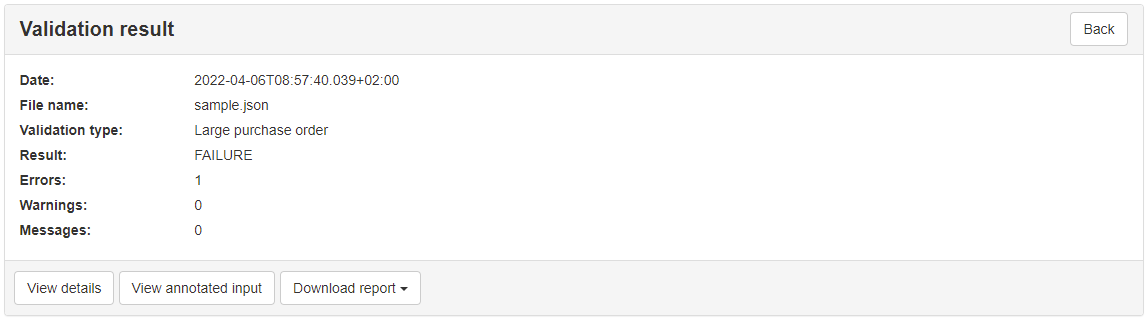

map on your host through the -p flag of the docker run command.
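The relation between the internal port and the host port can be sketched as follows. The port_mapping and run_validator functions are hypothetical helpers introduced only for illustration. They assume the po-validator:latest image built earlier and simply assemble the HOST_PORT:CONTAINER_PORT value expected by the -p flag.

```shell
# Hypothetical helper: format a Docker port mapping as HOST_PORT:CONTAINER_PORT.
port_mapping() {
  printf '%s:%s\n' "$1" "$2"
}

# Hypothetical helper: start a container from the image built earlier, publishing
# the validator's internal port (the server.port value) on the given host port.
run_validator() {
  host_port="$1"
  container_port="$2"
  docker run -d --name po-validator \
    -p "$(port_mapping "$host_port" "$container_port")" \
    po-validator:latest
}
```

For an image that sets server.port to 9090, calling `run_validator 8080 9090` would keep the validator reachable on host port 8080 even though it listens on 9090 inside the container.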