Guide: Governance interoperability design and conformance testing

About this guide

This guide focuses on a solution’s governance, discussing design guidelines that enable interoperability at a governance level across its stakeholders. For each topic considered, the guide also offers tips on covering these governance aspects as part of your conformance testing setup.

What you will achieve

At the end of this guide you should have an improved awareness of a solution’s governance aspects, and of the agreements involved in ensuring interoperability at a governance level between the implementing parties. For each governance topic discussed you will be presented with design guidelines sourced from best-in-class standards and specifications, and see which parts of such agreements you can practically cover in your conformance testing design.

If you are interested in more details on the background and sources behind this guide, you will also find a summary of the European Interoperability Reference Architecture (EIRA©) and the EIRA© Library of Interoperability Specifications (ELIS), which you can then explore further.

Note

The discussed topics and guidelines in this guide are not meant to be exhaustive. They represent common elements per topic sourced from the standards and specifications included in the ELIS.

What you will need

About 2 hours to go through all points.

A high-level understanding of IT concepts such as encryption and APIs. No technical details are discussed, and you do not need a technical profile to follow the guide.

(Optional) Check the Test Bed’s developer onboarding guide to get a high-level understanding of Test Bed technical concepts. Although not necessary to follow the guide, such concepts are often mentioned when discussing conformance testing guidelines.

How to complete this guide

The first chapter of this guide covers background concepts, and explains the guide’s rationale and approach. Although this background is interesting, you may choose to skip it and go directly to the subsequent chapters discussing legal, organisational, semantic and technical governance topics.

Each of these chapters is further split into topics that group together similar aspects. Every topic section follows the same structure, presenting first the design guidelines for the topic in question, followed by conformance testing tips. To keep these distinct and clear, they are presented in separate highlight boxes.

Design guidelines

Listing of design guidelines for the discussed topic

Conformance testing tips

Proposals on what can be tested and how to implement the resulting tests

Background

The sections in this chapter provide background information on governance interoperability, and the approach followed by this guide to extract design and testing guidelines.

The European Interoperability Reference Architecture (EIRA©)

The European Interoperability Reference Architecture (EIRA©) is a reference architecture for implementing interoperable European digital public services. It extends the ArchiMate® enterprise architecture modelling language, reusing ArchiMate® elements to define the key architecture building blocks (ABBs) needed to build interoperable e-Government systems. These building blocks are further organised into four views, as defined by the European Interoperability Framework.

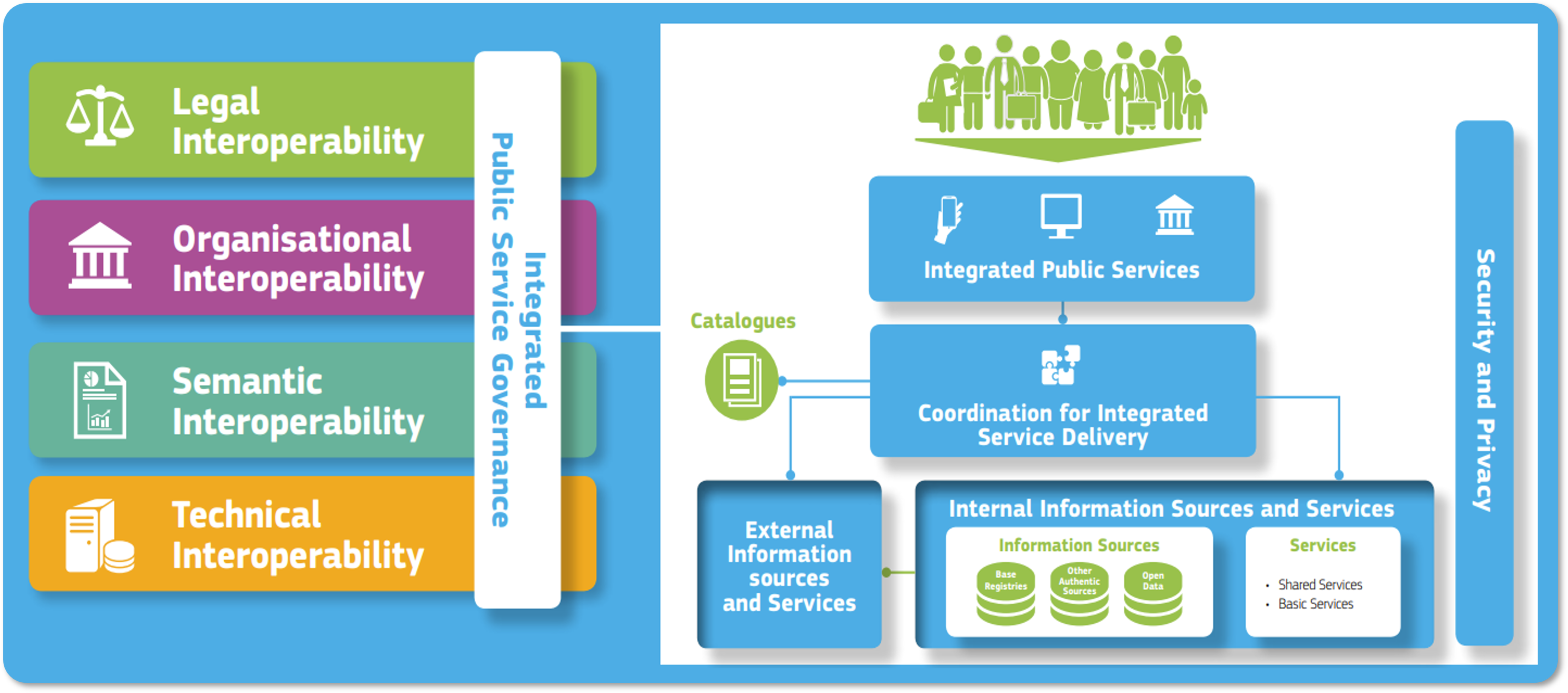

Figure 1: Interoperability views defined by the European Interoperability Framework

These views define the structure of the EIRA©, serving to classify a digital public service’s architecture building blocks based on their purpose. Specifically:

The Legal view building blocks cover its legal basis and motivation.

The Organisational view building blocks capture its organisational structure.

The Semantic view building blocks define its aspects pertaining to data and metadata.

The Technical view building blocks define its technical application and infrastructure elements.

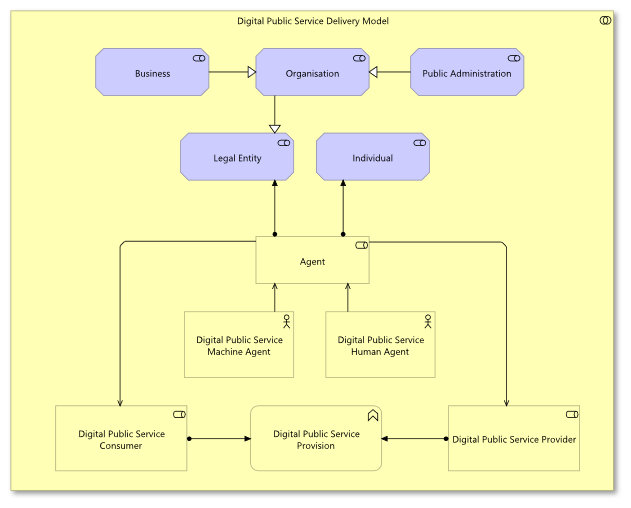

Figure 2: Organisational view excerpt showing ABBs related to service delivery (EIRA© v6.1.0)

Architecture building blocks are supported by rich definitions, making them a common terminology shared by administrators, architects and developers. Furthermore, the interconnection of building blocks across the Legal, Organisational, Semantic and Technical views results in an implicit narrative that links all elements and enables traceability within and across layers. As an example, one can use the EIRA© to trace the legal basis for specific application components, and the reason why they are deployed in a specific hosting environment.

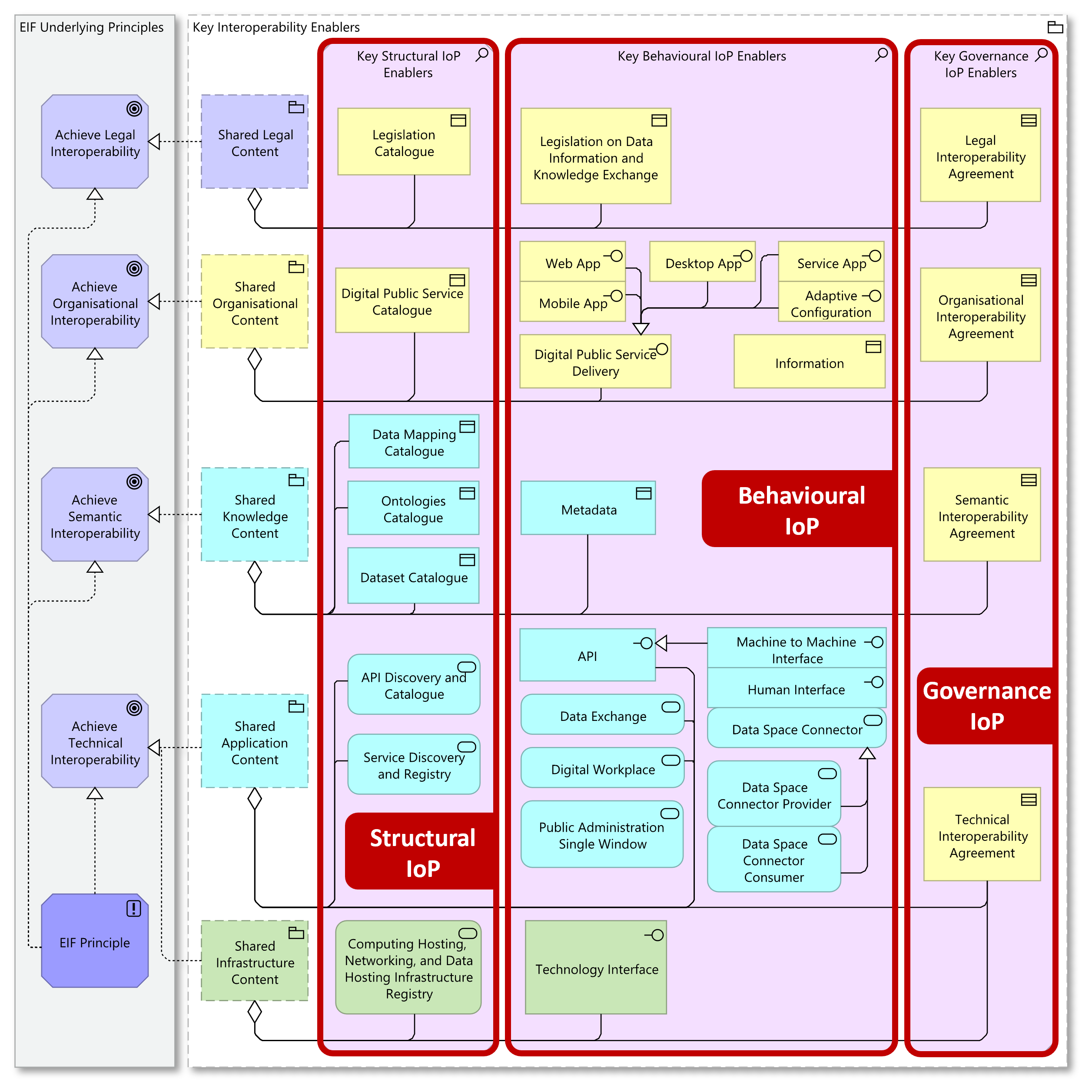

Besides its views and building blocks, the EIRA© provides insight and knowledge on different perspectives of the core architecture. Specific topics are addressed by means of viewpoints, diagrams that complement the four core views by taking a specific perspective considering the topic in question. Among these, and of particular interest for this guide, is the Key Interoperability Enablers viewpoint, which shows the key elements needed to achieve interoperability for the four core views, but based on different interoperability dimensions.

Figure 3: Interoperability dimensions and the Key Interoperability Enablers viewpoint (EIRA© v6.1.0)

These dimensions provide an interesting way to consider interoperability, as they define the nature of building blocks alongside their purpose. Three such dimensions are defined as follows:

Structural interoperability, pertaining to a solution’s structure, as manifested by the various components it consists of.

Behavioural interoperability, pertaining to a solution’s behaviour, in terms of data, information and knowledge exchanges with its environment.

Governance interoperability, pertaining to peer collaboration measures, agreements and shared policies, with stakeholders from external services.

Combining views, building block definitions and interoperability dimensions allows us to express and better understand a solution’s elements in terms of what they do and how they do it. This in turn gives us useful guidance for the solution’s conformance testing.

Conformance testing and interoperability dimensions

Conformance testing is the process of verifying that a solution correctly realises its requirements. In other words, it helps us apply quality control over a solution’s design and implementation.

To address a solution’s design, meaning to validate that its “blueprint” matches the requirements, the EIRA© offers the EIRA© validator: a service that takes an ArchiMate® model as input and produces a validation report. This report covers deviations from architecture blueprints (termed solution architecture templates), and highlights cases where the EIRA© modelling guidelines have not been correctly applied.

Note

The EIRA© validator is based on the Test Bed’s XML validator, validating ArchiMate® models represented in XML, using XML Schema and Schematron rules.

Conformance testing of a solution’s implementation is where the Test Bed comes in. Conformance testing here takes the form of scenario-based test cases that trigger, and make assertions on, the system’s observed behaviour. This is typically achieved by sending messages to and receiving messages from the system under test, using its APIs as defined by the requirements, and validating the produced results.

Considering the previously mentioned interoperability dimensions, this falls under behavioural interoperability, as we make assertions based on the system’s observed behaviour. In doing so, however, we also implicitly test for structural interoperability, given that the exhibited behaviour is only made possible by the components that make up the system. Certain expected behaviours may of course not be verifiable by interacting with the system from outside its boundaries. In such cases we can foresee the manual submission and review of evidence to reach a degree of assurance that the implementation is complete.

When we look at structural interoperability, and in particular at semantic building blocks such as data models and ontologies, we can also consider using validators to complement conformance tests. The Test Bed defines validators as components that are configured with a specification’s validation artefacts, and that validate content through several APIs. Besides being used as standalone services, validators can also be integrated into conformance test cases to cover the validation of exchanged data.

With respect to governance interoperability, conformance testing becomes less obvious given that we focus on collaboration rules and agreements between stakeholders. Even in this case however, we can typically extract common agreement elements that manifest as implementations within the systems under test, that can moreover be observed by interacting with them. As an example, a service level agreement stipulating minimum API response times can be tested by making calls, recording response times, and then asserting them against the required thresholds. Such an approach obviously can only be applied to a subset of topics, but nonetheless remains interesting to consider as part of a solution’s conformance testing design.
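The response-time example could be sketched in Python as follows. This is a minimal illustration under stated assumptions: `call` stands in for a request to the system under test, the 500 ms threshold is hypothetical, and judging the result on a nearest-rank 95th percentile (rather than a maximum or an average) is an assumption that would in practice come from the agreed service level.

```python
import math
import time
from typing import Callable, List


def measure_response_times(call: Callable[[], object], samples: int) -> List[float]:
    """Invoke `call` repeatedly and return each duration in milliseconds."""
    durations = []
    for _ in range(samples):
        start = time.perf_counter()
        call()  # assumed: one request to the system under test's API
        durations.append((time.perf_counter() - start) * 1000.0)
    return durations


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile (pct between 0 and 100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100.0))
    return ordered[rank - 1]


def assert_sla(durations: List[float], max_ms: float, pct: float = 95.0) -> None:
    """Fail if the chosen percentile exceeds the agreed threshold."""
    observed = percentile(durations, pct)
    if observed > max_ms:
        raise AssertionError(
            f"p{pct:g} response time {observed:.1f} ms exceeds {max_ms} ms"
        )


if __name__ == "__main__":
    # Stand-in for a real API call that takes roughly 10 ms.
    durations = measure_response_times(lambda: time.sleep(0.01), samples=20)
    assert_sla(durations, max_ms=500.0)
    print("SLA check passed")
```

Using a percentile rather than the single slowest call keeps one network hiccup from failing the test, while still catching a system that is consistently too slow.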

This guide focuses on governance interoperability to highlight the involved agreements and policies as a valid source of conformance test cases. When designing a solution’s conformance testing setup, we typically focus on testing the foreseen business processes and core technical elements. This guide aims to show that governance-level requirements can also be partly tested, allowing us to further increase our test coverage and quality assurance.

The EIRA© Library of Interoperability Specifications (ELIS)

The EIRA© Library of Interoperability Specifications (ELIS) is a catalogue of best-in-class standards and specifications, categorised based on their relevant EIRA© architecture building blocks. When designing a solution, one can use the EIRA© to determine and express the building blocks needed, and then refer to the ELIS to determine the specifications to consider when implementing each building block (either through reuse or new development).

The selection of specifications is based on rigorous assessments by applying the Common Assessment Method for Standards and Specifications (CAMSS), whereby only the highest scoring specifications are included. These assessments are published in the ELIS alongside the specifications to provide transparency, and also to allow interested parties to go into further details if desired. Both the CAMSS and the ELIS are curated and maintained by the CAMSS support team, as part of the Interoperable Europe initiative.

As part of providing a curated library of interoperability specifications, the ELIS also implicitly becomes a valuable body of knowledge on numerous interoperability aspects. By examining the specifications proposed by the ELIS for a given EIRA© architecture building block, we can extrapolate common elements, requirements and guidelines that can help us design for interoperability. Considering these guidelines we can determine what is typically expected per topic, but also what we can most likely be able to test for as part of our conformance testing.

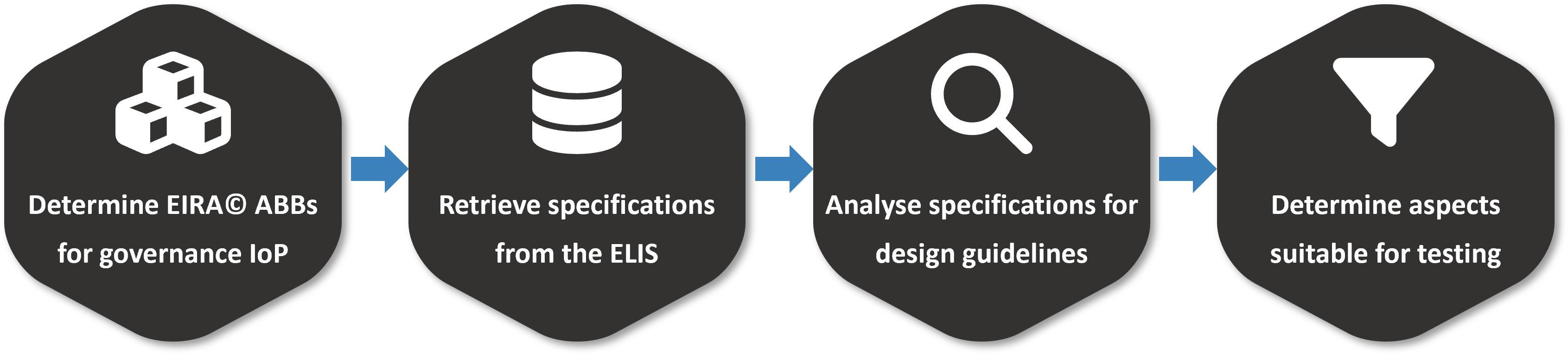

This guide follows this exact approach from the perspective of governance interoperability, helping us to identify common governance aspects we can include in our conformance testing. Specifically:

We use the EIRA© to determine the building blocks enabling governance interoperability.

For each building block we collect from the ELIS the proposed specifications.

We analyse the specifications to identify common aspects to treat as design guidelines.

We consider these guidelines to determine what can be included in conformance tests.

Figure 4: Extracting design guidelines and testing tips for governance interoperability

The following sections result from this work, proposing guidelines and conformance testing tips for Legal, Organisational, Semantic and Technical governance interoperability.

Legal governance

Legal governance involves the legal agreements that bind all involved stakeholders.

Legal interoperability agreements

Legal interoperability agreements aim to coordinate or harmonise efficient exchange models between digital public services within a Public Administration and/or across Public Administrations.

Topic: Accessibility

Design guidelines

Public digital services must adhere to the principles of perceivability, operability, understandability and robustness, ensuring full accessibility for users with disabilities.

Establish a standardised framework for regular monitoring and reporting of accessibility compliance across all digital public services.

Digital public services must include a user feedback mechanism specifically for accessibility issues, allowing users to report problems and request accessible alternatives.

Ensure robust enforcement mechanisms are in place to handle accessibility complaints, and enforce compliance with accessibility standards.

Conformance testing tips

From a conformance testing point of view, checking accessibility aspects would be focused on what can be observed in the involved solutions’ behaviour. Their technical implementation can be dynamically tested to ensure its conformance to the accessibility specifications in force (such as the Accessible Rich Internet Applications suite of specifications).

Such specifications typically involve accessibility metadata included in user interfaces, that test cases can request via messaging steps simulating users’ browsers, and then proceed to make accessibility-oriented assertions on the responses. An example of such assertions would be to check the produced responses to ensure that user interface elements include the necessary metadata attributes allowing narration for users with sight disabilities.
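As a rough illustration of such assertions, the following Python sketch inspects an HTML response for two simple issues: images lacking an `alt` attribute, and form inputs without an accessible label. The rules are a deliberately small subset chosen for illustration, not a WCAG or WAI-ARIA implementation; a real test would follow the applicable accessibility specification and would more likely delegate to a dedicated checker.

```python
from html.parser import HTMLParser
from typing import List, Set, Tuple

# Input types that this simplified check does not require to be labelled.
_NO_LABEL_NEEDED = {"hidden", "submit", "button", "reset"}


class AccessibilityChecker(HTMLParser):
    """Collects a few accessibility issues from an HTML document.

    Simplified rules (assumptions for illustration):
    - every <img> must have an alt attribute (alt="" marks decorative images);
    - every form <input> needs aria-label, aria-labelledby, a <label for="...">
      pointing at its id, or must be nested inside a <label>.
    """

    def __init__(self) -> None:
        super().__init__()
        self.issues: List[str] = []
        self._label_depth = 0
        self._label_targets: Set[str] = set()
        self._pending_inputs: List[Tuple[str, str]] = []  # (id, name)

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "img" and "alt" not in a:
            self.issues.append(f"img without alt: {a.get('src', '?')}")
        elif tag == "label":
            self._label_depth += 1
            if a.get("for"):
                self._label_targets.add(a["for"])
        elif tag == "input":
            if (a.get("type") or "text").lower() in _NO_LABEL_NEEDED:
                return
            if a.get("aria-label") or a.get("aria-labelledby") or self._label_depth:
                return
            # A matching <label for=...> may appear later, so decide at the end.
            self._pending_inputs.append((a.get("id") or "", a.get("name") or "?"))

    def handle_endtag(self, tag):
        if tag == "label" and self._label_depth:
            self._label_depth -= 1

    def close(self):
        super().close()
        for input_id, name in self._pending_inputs:
            if not input_id or input_id not in self._label_targets:
                self.issues.append(f"input without label: {name}")


def check_accessibility(html: str) -> List[str]:
    """Return the accessibility issues found in an HTML response body."""
    checker = AccessibilityChecker()
    checker.feed(html)
    checker.close()
    return checker.issues
```

In a test case, the HTML would be the response to a messaging step simulating a user’s browser, and a non-empty result would fail the corresponding verify step.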

Finally, we can also foresee test cases that simulate various client configurations to ensure that responses are appropriate and consistent. We could simulate mobile devices or PC browsers, including browsers with accessibility features enabled, to ensure that all platforms result in appropriately customised, but also consistently usable, responses. Examples here would be the disabling of animations for users having relevant intolerances, or colour corrections to cater for colour-blind users.

Topic: Legal responsibilities

Design guidelines

Clearly define the roles and responsibilities of the different organisations responsible for the maintenance and delivery of public services.

Establish a collaborative governance structure that includes representatives from all involved parties, ensuring that decisions are made collectively and transparently.

Mandate the active participation of all relevant stakeholders in the planning, development, and implementation phases of public services.

Conformance testing tips

Such governance points cannot be practically tested via conformance tests.

Topic: Privacy

Design guidelines

Data must be processed lawfully, fairly, and transparently in relation to the data subject.

Data should be collected for specified, explicit, and legitimate purposes and not further processed in a manner incompatible with those purposes.

Data collected should be adequate, relevant, and limited to what is necessary for the purposes for which it is processed.

Data should be accurate and, where necessary, kept up to date.

Data should be kept in a manner permitting identification of data subjects for no longer than necessary for the purposes for which the data is processed.

Data must be processed in a manner that ensures appropriate security, including protection against unauthorised or unlawful processing, accidental loss, destruction, or damage.

Ensure any transfer of personal data to third countries or international organisations is based on adequacy decisions, appropriate safeguards, or binding rules.

Clearly define the responsibilities of data controllers and data processors, including adherence to data protection principles and ensuring adequate data security measures.

Establish procedures for the notification of data breaches to the relevant supervisory authority and, where appropriate, to the data subjects without undue delay.

Conformance testing tips

Focusing again on a system’s observable behaviour, we need to consider what is practically achievable in terms of checking compliance with data privacy requirements. One example is the removal of private data once it is no longer needed, but also upon explicit user request. Where possible, we could foresee test cases that trigger data processing via messaging steps and, before completing, assert that the involved private data is no longer retrievable. To verify that all traces of such data have been removed, we could also add manual interaction steps requesting evidence of the removal, such as screenshots and log extracts, to be assessed by conformance testing staff before the test session completes.
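The automated part of such a check could look like the following Python sketch. The `submit`, `retrieve` and `erase` operations and the in-memory service are hypothetical placeholders for the messaging steps of an actual test case. Note that this only shows that data is no longer retrievable through the system’s interfaces; it cannot prove that every internal trace has been removed, which is why the manual evidence review remains useful.

```python
import uuid
from typing import Dict, Optional


class InMemoryService:
    """Hypothetical stand-in for a system under test that stores personal data."""

    def __init__(self) -> None:
        self._records: Dict[str, dict] = {}

    def submit(self, record: dict) -> str:
        record_id = str(uuid.uuid4())
        self._records[record_id] = dict(record)
        return record_id

    def retrieve(self, record_id: str) -> Optional[dict]:
        return self._records.get(record_id)

    def erase(self, record_id: str) -> None:
        # Corresponds to an erasure request issued on behalf of the data subject.
        self._records.pop(record_id, None)


def check_erasure(service, record: dict) -> None:
    """Submit personal test data, request its erasure, then assert it is gone."""
    record_id = service.submit(record)
    # Precondition: the data was actually stored, otherwise the test proves nothing.
    assert service.retrieve(record_id) == record, "record was not stored"
    service.erase(record_id)
    assert service.retrieve(record_id) is None, "personal data still retrievable"
```

Checking the precondition first matters: a system that never stored the data would otherwise pass the erasure assertion trivially.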

Topic: Security and trust

Design guidelines

Maintain comprehensive records of processing activities and implement regular audits to ensure compliance with GDPR and eIDAS requirements.

Include clauses that ensure electronic identification means are recognised across Member States, subject to the notified assurance levels and published conditions.

All parties involved in the service delivery should ensure that electronic identification schemes meet specific criteria to be eligible for notification, complying with the standards established by the eIDAS Regulation to facilitate interoperability.

Conformance testing tips

Electronic identification and trust schemes are aspects that come through in a solution’s observable operations. As such, we can foresee conformance test cases that address them by triggering message exchanges and ensuring that both identity management and trust are implemented according to specifications. This means that message exchanges in test cases should fully implement the identification and trust requirements, but you could also foresee a dedicated basic connectivity test suite with tests focusing specifically on these points.

Through a custom messaging service implementation we can also foresee testing of “unhappy flows”, where the Test Bed deliberately generates invalid messages and expects them to be rejected. Trust-related checks are good examples here, either by manipulating the signatures of otherwise correct messages or by signing them with an algorithm other than the agreed one. In such cases you would use verify steps to assert that the system under test rejects these messages due to trust problems, even though their payload is valid.
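The following Python sketch shows how such unhappy-flow variants might be built from an otherwise valid message. HMAC with a shared key is used purely as a self-contained stand-in for the signature scheme a real specification would mandate (typically certificate-based, such as XML signatures with X.509 certificates). `receiver_accepts` plays the role of the system under test, and SHA-256 being the agreed algorithm is an assumption.

```python
import hashlib
import hmac
from typing import List, Tuple

# Assumption: HMAC with a shared key stands in for the certificate-based
# signature scheme a real specification would mandate.
SHARED_KEY = b"hypothetical-shared-key"


def sign(payload: bytes, key: bytes = SHARED_KEY, algorithm=hashlib.sha256) -> bytes:
    """Sign the payload; SHA-256 is the (assumed) agreed algorithm."""
    return hmac.new(key, payload, algorithm).digest()


def receiver_accepts(payload: bytes, signature: bytes) -> bool:
    """Stand-in for the system under test: accept only correctly signed messages."""
    return hmac.compare_digest(sign(payload), signature)


def unhappy_flow_variants(payload: bytes) -> List[Tuple[str, bytes, bytes]]:
    """Build (label, payload, signature) triples with a valid payload but bad trust."""
    good = sign(payload)
    tampered = bytes([good[0] ^ 0x01]) + good[1:]  # flip one bit of the signature
    return [
        ("tampered signature", payload, tampered),
        ("non-agreed algorithm (SHA-1)", payload, sign(payload, algorithm=hashlib.sha1)),
    ]
```

Each variant keeps the payload untouched, so a rejection can only be attributed to the trust problem and not to content validation.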

Note that validating full conformance for identification and trust schemes may well be the focus of dedicated conformance testing services linked to those building blocks, which would be overkill to replicate as part of a specific solution’s conformance testing focusing on business processes. Nonetheless, even in such cases it is worth foreseeing the basic connectivity test suite discussed earlier, to establish that the overall setup works before proceeding with business-oriented test sessions. Such a test suite is a prime example of what is termed a shared test suite: a test suite that can be included in several specifications as a prerequisite, but that only needs to be completed once.

A good example of this practice is eDelivery, which has its own conformance testing service for access point implementations. Projects using eDelivery define their own separate conformance testing services, expecting the use of conformant access points and including only a limited set of connectivity tests as a prelude to testing their own business processes.

Organisational governance

Organisational governance pertains to the agreements in place to define and facilitate collaboration within and across organisations.

Agreements on data sharing

Agreements on data sharing formalise the information requirements, syntax bindings, protocols and semantic artefacts that must be used for the exchange of data.

Topic: Data sharing

Design guidelines

Data quality standards must be met, including accuracy, completeness, and timeliness. Metadata must be provided in accordance with agreed metadata standards.

Use secure protocols to protect data during transmission, providing encryption and ensuring data integrity.

Define the APIs to be used for data exchange.

Specify the protocols for transferring large data files or batch data.

Foresee messaging and queuing protocols for asynchronous data exchange.

Manage communication protocol versioning to ensure compatibility and manage updates. Stakeholders must be informed of any changes to agreed protocols.

Data must be formatted and structured according to agreed syntax standards.

Conformance testing tips

The use of data and metadata standards is a prime candidate for testing, for which validators can be configured depending on the syntax in question (the Test Bed provides validators for XML, RDF, JSON and CSV). Such validators can be offered as standalone tools to a project’s community, but also integrated in test cases by means of verify steps to validate exchanged data.
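In practice a Test Bed validator is configured with the specification’s own validation artefacts. Purely to illustrate the kind of checks a syntax-specific validator performs, here is a Python sketch for CSV. The column layout and the per-column regular expressions are hypothetical assumptions.

```python
import csv
import io
import re
from typing import Dict, List

# Hypothetical agreed layout: column name -> regular expression for its values.
SCHEMA: Dict[str, str] = {
    "id": r"\d+",
    "country": r"[A-Z]{2}",
    "date": r"\d{4}-\d{2}-\d{2}",
}


def validate_csv(content: str, schema: Dict[str, str] = SCHEMA) -> List[str]:
    """Check the header and every cell of a CSV document against `schema`."""
    reader = csv.DictReader(io.StringIO(content))
    header = reader.fieldnames or []
    if header != list(schema):
        return [f"header {header} does not match {list(schema)}"]
    errors = []
    for row in reader:
        for column, pattern in schema.items():
            value = row[column] or ""  # missing trailing cells come back as None
            if not re.fullmatch(pattern, value):
                errors.append(
                    f"line {reader.line_num}, column {column}: invalid value {value!r}"
                )
    return errors
```

Reporting every error with its line and column, rather than stopping at the first, mirrors what makes validation reports useful to implementers.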

Regarding use of secure protocols, involved APIs, asynchronous messaging and other data exchange aspects, this should be the key focus of test cases. Scenarios should be foreseen based both on sending and receiving messages, validating multi-step exchanges for consistency, and validating edge cases resulting from “unhappy flows”.

In terms of messaging design for test cases, the best practice is to consider entire business processes involving multiple exchange steps between the Test Bed and the system under test. Besides validating the messages exchanged at each step, this also allows you to check that each message in the overall sequence is coherent with its predecessors, by correctly referring to selected data and correlation identifiers. It is also good practice to make each test session unique, by generating random data or randomly selecting elements from an agreed test data set. Doing so ensures not only that the system under test produces valid messages, but also that it does so taking into account the specific test data of each test session.
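The Python sketch below illustrates both practices: generating data unique to a session, and checking that each reply in a multi-step exchange refers back to it. The field names (`correlationId`, `product`, `quantity`, `status`), the test product list and the expected status sequence are all hypothetical.

```python
import random
import uuid
from typing import Dict, List

# Hypothetical agreed test data set shared with implementers.
TEST_PRODUCTS = ["P-001", "P-002", "P-003", "P-004"]

# Hypothetical order of replies expected from the system under test.
EXPECTED_STATUSES = ["ACKNOWLEDGED", "CONFIRMED"]

# Fields every reply must echo back from the session's request.
ECHOED_FIELDS = ("correlationId", "product", "quantity")


def new_session_data(rng: random.Random) -> Dict[str, object]:
    """Generate request data that is unique to one test session."""
    return {
        "correlationId": str(uuid.uuid4()),
        "product": rng.choice(TEST_PRODUCTS),
        "quantity": rng.randint(1, 50),
    }


def check_exchange(session: Dict[str, object], replies: List[Dict[str, object]]) -> List[str]:
    """Check the sequence of replies against the data sent in this session."""
    problems = []
    statuses = [reply.get("status") for reply in replies]
    if statuses != EXPECTED_STATUSES:
        problems.append(f"unexpected status sequence: {statuses}")
    for step, reply in enumerate(replies, start=1):
        for field in ECHOED_FIELDS:
            if reply.get(field) != session[field]:
                problems.append(f"step {step}: {field} does not match the request")
    return problems
```

Because the product and quantity change from one session to the next, a system returning canned responses would fail the field checks even if its messages were syntactically valid.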

Having said this, nothing prevents you from also defining more focused or technical-oriented test cases for individual message types, where messages are tested in isolation. In doing so you will effectively be introducing a set of unit tests that complements the test cases addressing business processes as a whole.

Agreements on the use of common infrastructure

Agreements on the use of common infrastructure outline the terms and conditions for sharing and using a specific infrastructure or facility between multiple parties.

Topic: Infrastructure usage

Design guidelines

Specify the financial arrangements for using the shared infrastructure. Ensure cost-sharing models support the objective of creating economic value from data by enabling cost-efficient access to data infrastructure.

Ensure each party is explicitly entitled to access and use the infrastructure as per the agreed terms, and is made responsible for adhering to usage policies, maintenance, and reporting of issues.

Define the security protocols to protect the shared infrastructure that must be followed by all parties. Security measures should comply with requirements for safeguarding data, including the protection of sensitive and private data.

Outline the terms and conditions under which the infrastructure can be accessed and used. Access to the infrastructure will be granted upon request and under the condition that usage does not exceed allocated resources.

Conformance testing tips

Such points have no bearing on a system’s perceived behaviour and cannot be practically tested via conformance tests.

Privacy frameworks and agreements

Privacy frameworks enable the confidentiality aspects of data, information and knowledge assets, and of the organisational resources handling them. Agreements on privacy establish a set of rules for the collection, processing and transfer of individuals’ personal data by Public Administrations.

Topic: Privacy

Design guidelines

The service provider must comply with all relevant data protection regulations, ensuring that personal data is processed lawfully, fairly, and transparently.

Explicit consent must be obtained from data subjects before processing their personal data, and mechanisms for managing and withdrawing consent must be provided.

Personal data must be anonymised or pseudonymised where possible to enhance privacy and reduce the risk of identification.

Only the minimum amount of personal data required for the service will be collected and processed, in accordance with the principle of data minimisation.

Regular Data Protection Impact Assessments (DPIAs) must be conducted to identify and mitigate risks to personal data.

The service provider must provide mechanisms for data subjects to exercise their rights, including access, rectification, erasure, and data portability.

Conformance testing tips

A solution’s data protection implementation can be partially tested based on its exhibited behaviour. We can foresee test cases involving message exchanges that use test data treated, for testing purposes, as private. As part of these tests we can add assertions following the exchanges to ensure that the data in question is removed when no longer needed. Ideally this would be done in an automated manner through specific additional requests, but it could otherwise be achieved manually by providing evidence via interact steps (e.g. a screenshot or log extract). Following the tester’s evidence submission, a second, administrator-level interact step would then allow an administrator to verify that processing was correct before the test session completes.

The anonymisation and pseudonymisation of data can also be tested for through relevant exchanges. For example, tests can be based on an agreed test dataset with mock private information, for which we can add assertions following exchanges to ensure that anonymisation and pseudonymisation have taken place. It is interesting to note that anonymisation and pseudonymisation can also take place on data managed by the Test Bed, by means of a custom extension that is used before data is processed. Depending on what is more meaningful, this could be done as part of a send step using a custom messaging implementation, or considered as a separate utility used through a process step that delegates to a custom processing service.
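Both ideas can be sketched in Python. `leaked_values` is the kind of assertion a test could apply to the system’s output when using an agreed dataset of mock personal data, and `pseudonymise` shows a keyed-hash (HMAC-SHA256) pseudonym such as a custom Test Bed extension might compute. The mock values, the secret and the choice of HMAC as the pseudonymisation technique are assumptions for illustration.

```python
import hashlib
import hmac
import json
from typing import Iterable, List

# Hypothetical mock personal data taken from the agreed test data set.
MOCK_PERSONAL_VALUES = ["Jane Testperson", "jane.testperson@example.org", "1985-04-12"]


def leaked_values(output: object, personal_values: Iterable[str]) -> List[str]:
    """Return the mock personal values that still appear in the output.

    The output is serialised to JSON text and searched as a whole, so a leak
    is detected whichever field it ends up in.
    """
    text = json.dumps(output, ensure_ascii=False)
    return [value for value in personal_values if value in text]


def pseudonymise(value: str, secret: bytes) -> str:
    """Keyed-hash pseudonym: the same value and secret always give the same
    pseudonym, and it cannot be recomputed without the secret."""
    return hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]
```

Searching the serialised output as plain text is crude (short values may match by coincidence), but it has the advantage of not depending on where the system places each field.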

Security frameworks and agreements

Security frameworks enable the protection of various aspects of data, information and knowledge assets and the organisational resources handling them. Agreements on interoperability security formalise governance rules and conditions to grant the identification, authorisation and transmission of the data, information and knowledge being exchanged between digital public services.

Topic: Security and trust

Design guidelines

Ensure compliance with all legal, regulatory, and internal security policies. Regular audits and assessments must be conducted to ensure compliance and identify areas for improvement.

Ensure allocation of sufficient resources (financial, technical and human) to implement, maintain and continuously improve security measures.

Define risk management processes to identify, assess, and mitigate risks associated with the use and sharing of digital resources. These processes should also be subject to regular risk assessments.

Involve all relevant internal and external stakeholders in security planning and decision-making processes. Regular communication and collaboration are required to ensure comprehensive security measures.

Adhere to established security standards and best practices. Regular reviews must be performed to ensure compliance is maintained.

Ensure all partners and suppliers undergo a thorough vetting process to ensure they meet security standards and can be trusted with sensitive data. Regular audits must be conducted to ensure trustworthiness is maintained.

Conformance testing tips

Although most topics here refer to organisational alignment on security and trust, we could foresee test cases covering the aspects that would be reflected in a solution’s implementation. The primary focus here would be the agreed security standards in so far as they pertain to the solution’s operational security. As an example one could envision test cases focusing on key points of the OWASP Application Security Verification Standard (ASVS) in which the solution’s behaviour is assessed through tailored message exchanges.
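As an example of cherry-picking a few such requirements, the Python sketch below checks HTTP security response headers of the kind covered by the ASVS HTTP security header requirements. The selection of headers and the acceptance rules are assumptions; the actual list should come from the ASVS version and level agreed for the project.

```python
from typing import Dict, List, Mapping

# Hypothetical selection of header expectations; adapt to the agreed ASVS level.
REQUIRED_HEADERS = {
    "strict-transport-security": lambda v: "max-age=" in v.lower(),
    "x-content-type-options": lambda v: v.strip().lower() == "nosniff",
    "content-security-policy": lambda v: bool(v.strip()),
}


def check_security_headers(headers: Mapping[str, str]) -> List[str]:
    """Return a list of problems found in a response's headers."""
    # HTTP header names are case-insensitive, so compare them in lower case.
    normalised: Dict[str, str] = {k.lower(): v for k, v in headers.items()}
    problems = []
    for name, is_valid in REQUIRED_HEADERS.items():
        value = normalised.get(name)
        if value is None:
            problems.append(f"missing header: {name}")
        elif not is_valid(value):
            problems.append(f"unexpected value for {name}: {value!r}")
    return problems
```

Such a check can run on responses already obtained in business-oriented test cases, adding some security coverage without extra message exchanges.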

Given the extensive requirements of a standard such as the OWASP ASVS, it would most likely be overkill to implement each of these as a distinct test case. Keep in mind that the focus of most solutions’ conformance testing is the business processes they implement, which by themselves could result in hundreds of test cases without even considering aspects such as security. In addition, note that the Test Bed is not a penetration testing solution; several purpose-built tools are available that provide out-of-the-box testing and reporting features specifically targeting security.

Nonetheless, it is always beneficial to provide at least some coverage for non-functional aspects such as security, by means of a limited set of core test cases that cherry-pick key requirements to test. By requiring this test suite to be completed alongside business-oriented tests, we increase our assurance of the tested systems’ quality, and at least raise awareness of the importance of such aspects.

Semantic governance

Semantic governance pertains to the policies and agreements ensuring that all parties share a common understanding and treatment of semantic assets.

Data policies

Data policies aim to form the guiding framework in which data management can operate.

Topic: FAIR principles compliance

Design guidelines

Ensure data is Findable through standardised metadata, unique and persistent identifiers, and data indexing in searchable repositories.

Ensure data is Accessible through clear access protocols, authentication and authorisation procedures if necessary, and archiving for long-term availability.

Ensure data is Interoperable through use of open and standard data formats, shared vocabularies and ontologies, and cross-referencing with other datasets and standards.

Ensure data is Reusable by means of clear licensing, detailed documentation, and continuous quality assurance processes to ensure data is valid and reliable.

Conformance testing tips

Aspects such as the use of specific data and metadata standards, vocabularies, ontologies and syntaxes can all be tested through test cases. The Test Bed includes several built-in validation capabilities to do so, but also offers the possibility of defining full validators that are configured with the target specifications’ validation artefacts (e.g. SHACL shapes configured in an RDF validator instance). Such validators are usable as standalone services, but also within test cases as the implementation of verify steps.
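In the Test Bed such rules would typically be expressed as validation artefacts, for example SHACL shapes. Purely to show the kind of rule involved, the following Python sketch applies a few FAIR-inspired checks to a metadata record held as a plain dictionary. The property names loosely follow the Dublin Core terms used in DCAT (`dct:identifier`, `dct:license`, `dct:description`, `dct:format`); the rules and the list of open formats are assumptions for illustration.

```python
from typing import List, Mapping
from urllib.parse import urlparse

# Hypothetical list of agreed open formats (media types).
OPEN_FORMATS = {"text/csv", "application/json", "application/ld+json", "text/turtle"}


def check_fair_metadata(record: Mapping[str, object]) -> List[str]:
    """Check a dataset metadata record against a few FAIR-inspired rules."""
    problems = []
    parsed = urlparse(str(record.get("dct:identifier") or ""))
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        problems.append("Findable: identifier is not an HTTP(S) URI")
    if not record.get("dct:license"):
        problems.append("Reusable: no licence stated")
    if not record.get("dct:description"):
        problems.append("Reusable: no description")
    fmt = record.get("dct:format")
    if fmt not in OPEN_FORMATS:
        problems.append(f"Interoperable: format {fmt!r} is not an agreed open format")
    return problems
```

Prefixing each problem with the FAIR principle it relates to makes the resulting report easier to map back to the design guidelines above.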

Concerning data retrieval channels, we can test aspects such as access protocols, authentication and authorisation by having test cases simulate data consumers. Moreover, test cases can be based on an agreed test dataset, from which specific data is requested depending on the scenario being tested.
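A test case simulating a data consumer could combine negative and positive access checks, as in the following Python sketch. The `fetch` callable is a hypothetical client for the system under test. The sketch assumes the requested dataset is restricted, that rejection is signalled with HTTP status 401 or 403, and that the expected content comes from the agreed test dataset.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Response:
    status: int
    body: Optional[dict] = None


# Hypothetical client call: (dataset_id, access_token or None) -> Response
Fetch = Callable[[str, Optional[str]], Response]


def check_access_control(fetch: Fetch, dataset_id: str, valid_token: str,
                         expected: dict) -> List[str]:
    """Simulate a data consumer and check authentication and authorisation."""
    problems = []
    if fetch(dataset_id, None).status not in (401, 403):
        problems.append("anonymous request was not rejected")
    if fetch(dataset_id, "invalid-token").status not in (401, 403):
        problems.append("request with invalid token was not rejected")
    ok = fetch(dataset_id, valid_token)
    if ok.status != 200:
        problems.append(f"authorised request failed with status {ok.status}")
    elif ok.body != expected:
        problems.append("returned data does not match the agreed test data set")
    return problems
```

Running the negative checks first ensures that an open endpoint is reported even when it returns the correct data.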

Topic: Legal compliance

Design guidelines

Ensure adherence to relevant regulations on data openness and reuse of public sector information.

Reuse requests should be processed in accordance with EU regulations. The conditions under which data can be reused should be clarified, ensuring compliance with the relevant directives.

Conformance testing tips

It is possible to test that a solution expected to publish open data is doing so correctly. The details of how and in what form open data is published are specific to each solution, but we can derive a common approach whereby we assert that collected test data is published as expected.

For cases where data to publish is pushed to the system under test, we can use send steps to simulate data providers. Likewise, if data to be published is harvested by the system under test, we can simulate its sources through receive steps configured to respond with test data when the system requests it. Regardless of how data is collected, we would then retrieve it once published, and make assertions to ensure it matches what was collected and is exposed over the expected access protocols.

Interestingly, we could also foresee test cases to validate that the expected quality control processes are in place. This could be done through “unhappy flow” tests, whereby the test data to be published is intentionally invalid or of low quality. In these cases the assertions are reversed: we expect the system under test to prevent the low-quality data from being published.

Semantic interoperability agreements

Semantic interoperability agreements formalise governance rules enabling collaboration between digital public services with ontological value.

Topic: Data exchange

Design guidelines

Ensure that data flowing across systems, services, and devices is identified and classified based on types, formats, structures, and sensitivity.

Define clear data flow paths between the components realising the digital public service. Specify any transformations or processing steps that occur during data flow.

Conformance testing tips

The data flows foreseen by a solution are the key focus of any conformance testing campaign. In GITB TDL test cases these are tested by means of messaging steps, using either built-in capabilities, or custom extensions for protocols without built-in support or where finer control is needed.

You should ensure that all foreseen flows are covered by test cases, including processes that entail multiple exchanges, to ensure conversational consistency. In addition, don’t forget to add “unhappy flow” test cases that purposely make invalid exchanges, to ensure edge cases and error handling are correctly implemented.
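The Python sketch below illustrates both points: a two-step conversation in which the second exchange must refer back to the first, and a small table of deliberately invalid exchanges paired with the error each one should trigger. The `StubSubmissionService`, its `submit`/`query` operations and its error codes are hypothetical stand-ins for a real system under test; in the Test Bed the exchanges would be messaging steps and the checks verify steps.

```python
import uuid


class StubSubmissionService:
    """Hypothetical system under test: accepts a submission, then answers status queries for it."""

    def __init__(self):
        self._submissions = {}

    def submit(self, payload: dict) -> dict:
        if "document" not in payload:
            return {"status": "error", "code": "MISSING_DOCUMENT"}
        reference = str(uuid.uuid4())
        self._submissions[reference] = "RECEIVED"
        return {"status": "ok", "reference": reference}

    def query(self, reference: str) -> dict:
        if reference not in self._submissions:
            return {"status": "error", "code": "UNKNOWN_REFERENCE"}
        return {"status": "ok", "reference": reference, "state": self._submissions[reference]}


def run_conversation(service) -> None:
    """Happy flow over two exchanges: the second must correlate with the first."""
    first = service.submit({"document": "<invoice/>"})
    assert first["status"] == "ok"
    second = service.query(first["reference"])
    assert second["status"] == "ok"
    assert second["reference"] == first["reference"], "conversation lost its correlation"


# Unhappy flows: (name, deliberately invalid exchange, expected error code).
UNHAPPY_FLOWS = [
    ("submit without document", lambda s: s.submit({}), "MISSING_DOCUMENT"),
    ("query unknown reference", lambda s: s.query("does-not-exist"), "UNKNOWN_REFERENCE"),
]


def run_unhappy_flows(service) -> list:
    """Return the names of unhappy flows that did not produce the expected error."""
    failures = []
    for name, exchange, expected_code in UNHAPPY_FLOWS:
        response = exchange(service)
        if response.get("status") != "error" or response.get("code") != expected_code:
            failures.append(name)
    return failures


service = StubSubmissionService()
run_conversation(service)
assert run_unhappy_flows(service) == []
```

Keeping the unhappy flows in a table makes it easy to see which error conditions of the specification are covered and to add new ones as edge cases are identified.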

Topic: Data modelling

Design guidelines

Use standardised semantic data models ensuring models are simplified, reusable, and extensible, capturing the fundamental characteristics of data exchanged by stakeholders.

Regularly check and ensure compliance with the adopted semantic data models. Keep the data models updated and maintain them as necessary to reflect changes in standards or requirements.

Provide support and training to involved stakeholders to ensure proper implementation of the data models.

Conformance testing tips

Validation of data models is extensively supported by the Test Bed’s validators. These follow a configuration-driven approach whereby validation artefacts are configured over reusable core components covering the most popular syntaxes (XML, RDF, JSON and CSV). Validators are integrated in test cases using verify steps, and can even be supplied with custom artefacts generated during the course of test sessions to validate specific scenarios.

Version updates to the semantic standards in use can also be tested by updating our test suites’ validation artefacts. When doing so we can create new versions of the test suites and their related specifications, to distinguish them from tests carried out for earlier releases. This allows us to keep the systems’ testing history for reference, while having clarity on the testing progress for the latest releases.
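To show why keeping validation artefacts per release matters, the Python sketch below validates the same record against two specification versions. The version numbers and the “required properties” rules are hypothetical simplifications standing in for real validation artefacts such as SHACL shapes or schemas attached to each test suite version.

```python
# Hypothetical validation rules per specification release. In the Test Bed these
# would be the validation artefacts attached to each test suite version.
RULES_BY_VERSION = {
    "1.0.0": {"required": {"identifier", "title"}},
    "2.0.0": {"required": {"identifier", "title", "publisher"}},
}


def validate(record: dict, spec_version: str) -> list:
    """Return the violations of `record` against the given specification release."""
    rules = RULES_BY_VERSION[spec_version]
    missing = sorted(rules["required"] - set(record))
    return [f"missing property: {name}" for name in missing]


record = {"identifier": "ds-1", "title": "Bus stops"}

# The same record conforms to the earlier release but not to the latest one, so
# results are kept per version instead of the new rules overwriting the old.
results = {version: validate(record, version) for version in RULES_BY_VERSION}
print(results)
```

A system that passed the 1.0.0 tests keeps that result on record, while the failure against 2.0.0 shows precisely what remains to be done for the latest release.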

Topic: Metadata use and management

Design guidelines

Use descriptive core metadata elements common to all exchange domains.

Define and include additional extension metadata elements specific to particular exchanges.

Ensure metadata quality through validation processes. Focus on an automated testing approach to identify and report inconsistencies or errors.

Use specialised tools to effectively manage and integrate metadata standards, offering functionalities such as metadata cataloguing, search and discovery, and governance.

Establish governance frameworks to ensure metadata accuracy, consistency, and compliance.

Foresee automatic discovery and harvesting of metadata from various data sources.

Implement a change management process to handle updates and modifications to metadata schemas, and use version control systems to track changes.

Conformance testing tips

Metadata quality control should be a first-class concern alongside the quality of data. Metadata would typically be expressed through RDF ontologies that can easily be validated using SHACL shapes via an RDF validator instance. Besides using such validators as standalone tools, they can also be integrated in test cases through verify steps. Moreover, validation in test cases can be extended with custom shapes, generated on the fly using templates and data from the current test session. Doing so ensures not only that metadata is correct but also that it matches each specific test scenario.
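The idea of generating shapes from a template and session data can be sketched in Python as follows. The Test Bed has its own templating mechanisms, so this only illustrates the principle. The `ex:` namespace, the shape name and the `datasetId`/`title`/`lang` session keys are hypothetical; the SHACL, DCAT and Dublin Core namespaces are the standard ones. The rendered Turtle would then be passed as an additional shape to an RDF validator.

```python
from string import Template

# Hypothetical shape template; $-placeholders are filled from the current test session.
SHAPE_TEMPLATE = Template("""\
@prefix sh: <http://www.w3.org/ns/shacl#> .
@prefix dcat: <http://www.w3.org/ns/dcat#> .
@prefix dct: <http://purl.org/dc/terms/> .
@prefix ex: <http://example.org/shapes#> .

ex:SessionDatasetShape
    a sh:NodeShape ;
    sh:targetClass dcat:Dataset ;
    sh:property [
        sh:path dct:identifier ;
        sh:minCount 1 ;
        sh:hasValue "$dataset_id" ;
    ] ;
    sh:property [
        sh:path dct:title ;
        sh:hasValue "$title"@$lang ;
    ] .
""")


def turtle_literal(text: str) -> str:
    """Escape backslashes and double quotes so the value is safe inside a Turtle string."""
    return text.replace("\\", "\\\\").replace('"', '\\"')


def build_session_shape(session: dict) -> str:
    """Render the scenario-specific shape from (hypothetical) test session values."""
    return SHAPE_TEMPLATE.substitute(
        dataset_id=turtle_literal(session["datasetId"]),
        title=turtle_literal(session["title"]),
        lang=session.get("lang", "en"),
    )


shapes = build_session_shape({"datasetId": "ds-42", "title": "Bus stops"})
print(shapes)
```

The `sh:hasValue` constraints are what tie validation to the scenario: metadata that is well formed but describes a different dataset than the one used in the session would still fail.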

Besides validating metadata, test cases can also be defined to validate their discovery and harvesting. The relevant APIs and involved exchanges can be simulated by the Test Bed, ensuring that systems under test correctly implement their specifications. This can be achieved through messaging steps and potentially supported by a custom messaging service.

Finally, it is worth noting that automated quality assurance is well supported by the Test Bed. Validators expose machine-to-machine APIs and extensive configuration options, making it simple to include them in automated quality control workflows. For more elaborate tests, the GITB Test Bed software itself also exposes a REST API that allows you to deploy test suites and execute tests in a “headless” manner as part of continuous integration processes.

Topic: Ontology design

Design guidelines

Ensure the use of established Ontology Design Patterns (ODPs) to promote semantic interoperability and consistency. Adopt ODPs from recognised sources such as the W3C and other standardisation bodies.

Conduct regular reviews of the ontologies to ensure ongoing compliance with the adopted ODPs.

Establish conformance checks to verify that ontologies adhere to the selected patterns and best practices.

Promote continuous improvement by updating ODPs and ontology development processes based on feedback and evolving standards.

Use well-established ontology languages such as OWL (Web Ontology Language), RDFS (RDF Schema) and SKOS (Simple Knowledge Organisation System) to define and instantiate ODPs.

Ensure comprehensive documentation for each ODP including its purpose, structure, usage and reuse guidelines, and implementation workflow.

Use specialised tools compatible with standardised formats that include key functionalities (ontology editing, visualisation, reasoning and validation) for the proper and consistent development of ontologies and semantic datasets.

Conformance testing tips

Validating the correct use of ontologies is a key use case for the Test Bed’s RDF validator, making use of the ontology’s foreseen validation artefacts (typically SHACL shapes). Besides validating for an RDF graph’s basic correctness, a validator can also be configured to check best practices and design patterns, both specific to the ontology as well as extensions foreseen for a given solution.

A good example of this is the SEMIC Style Guide validator, which checks ontologies based on the eGovernment Core Vocabularies for alignment with the SEMIC Style Guide. As for the SHACL shapes defining an ontology’s conformance rules, the Test Bed offers a SHACL shape validator, which can be used to validate the shapes themselves against the SHACL specification and curated best practices. Such validation checks would typically take place during the development of the ontologies in question, and could also be replicated in conformance tests to ensure the alignment of different parties.

Topic: Standards compliance

Design guidelines

Ensure compliance with recognised semantic standards such as the Core eGovernment Vocabularies to ensure uniformity and interoperability across systems.

Ensure compliance with recognised metadata standards such as Schema.org.

Conformance testing tips

Testing conformance to such semantic standards is a classic Test Bed use case. Typically all test cases involving data exchanges should include verify steps that validate the data against the expected validation artefacts. This can be done by using the Test Bed’s built-in validation capabilities, or by delegating to custom validator instances.

Interestingly, when dealing with recognised data and metadata standards, there is also a good possibility that the standard is supported by an already existing public validator which can be integrated as-is in test cases. This is possible given that all the Test Bed’s validator instances by default also implement the GITB validation service API that allows them to be used in GITB TDL test cases as the implementation of verify steps. An example of such a validator is the public DCAT-AP validator maintained by the SEMIC team.

With regards to reusing the eGovernment Core Vocabularies in particular, the Test Bed hosts the SEMIC Style Guide validator. This is a validator also maintained by the SEMIC team, allowing ontology developers to test for alignment against the guidelines listed in the SEMIC Style Guide.

Technical governance

Technical governance pertains to the agreements on technical processes and operations, enabling knowledge sharing across Public Administrations to achieve public policy goals.

Service Level Agreements (SLAs)

Service Level Agreements (SLAs) formalise the agreement between a service provider and consumer, outlining expected service levels, as well as the metrics to measure the service’s quality.

Topic: Metrics and reporting

Design guidelines

Foresee performance metrics such as response times, throughput, and latency, which together define the solution’s expected performance characteristics.

Foresee scalability metrics such as load handling and elasticity, which define the solution’s expected behaviour when subjected to increasing or decreasing load.

Foresee reliability metrics such as error rates, mean time between failures, and mean time to repair, which define how the solution should handle errors.

Establish mechanisms for regular reporting and analysis of established metrics. Regular audits should also be foreseen to ensure continuous alignment.

Conformance testing tips

Expected response times and throughput are good candidates for testing: generate a batch of typical requests for the system, and observe the produced responses to measure processing times and check completion within the expected time window. Similarly, SLA metrics pertaining to characteristics of individual requests (e.g. maximum supported payload sizes) can be tested by preparing appropriate data for such edge cases. A good approach to realise such tests would be to use a custom messaging or processing service that the test case signals to carry out the requests on its behalf. This service would then call the system under test as needed, and return a summary of the calls and observed responses as the relevant test step’s report.
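A minimal Python sketch of the work such a processing service might do is shown below. `call_system_under_test` is a hypothetical placeholder (here it simply sleeps), and the 95th-percentile threshold, the batch time window and the 1 MiB payload edge case are assumed SLA values chosen for illustration. The returned dictionary plays the role of the summary reported back as the test step’s report.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def call_system_under_test(payload: bytes) -> int:
    """Hypothetical stand-in for one request to the system under test; returns a status code."""
    time.sleep(0.01)  # simulate processing time
    return 200


def measure_batch(requests, max_p95_seconds, max_total_seconds, workers=4) -> dict:
    """Send a batch of requests and summarise timings against assumed SLA thresholds."""

    def timed_call(payload):
        start = time.perf_counter()
        status = call_system_under_test(payload)
        return status, time.perf_counter() - start

    batch_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(timed_call, requests))
    total = time.perf_counter() - batch_start

    latencies = sorted(duration for _, duration in results)
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    p95 = statistics.quantiles(latencies, n=20)[-1]
    errors = sum(1 for status, _ in results if status != 200)
    return {
        "requests": len(results),
        "errors": errors,
        "p95_seconds": p95,
        "batch_seconds": total,
        "throughput_per_second": len(results) / total,
        "within_sla": errors == 0 and p95 <= max_p95_seconds and total <= max_total_seconds,
    }


# Typical requests plus one edge case at an assumed maximum payload size of 1 MiB.
batch = [b"typical request"] * 40 + [b"x" * 1024 * 1024]
report = measure_batch(batch, max_p95_seconds=0.5, max_total_seconds=5.0)
print(report)
```

The batch here is deliberately modest: it checks that typical and edge-case requests complete within the agreed window, not how the system behaves under sustained load.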

Error and edge cases are also ideal candidates for “unhappy flow” test cases, as here you can benefit from the fact that you are using the Test Bed and not an actual implementation as the counterpart of the system under test. A real-world implementation of the specifications would likely be unable to “behave badly”, something that in contrast is perfectly achievable (and testable) through the Test Bed.

Having said this, keep in mind that metrics linked to load testing would ideally be validated by specialised stress testing tools, and not the Test Bed. In addition, metrics such as availability, which require observing the system over a period of time, would also be impractical to test.

Topic: Review processes

Design guidelines

Specify the frequency of SLA reviews to ensure they remain relevant, effective, and aligned with evolving business needs, technological advancements, and regulatory requirements.

Define the procedure for SLA reviews, including stakeholder meetings and feedback collection to identify important service features and appropriate metrics.

Describe the SLA change management process, clarifying how amendments are proposed, approved, documented, and planned for implementation.

Conformance testing tips

The Test Bed would naturally not be used to validate how SLAs are reviewed and updated. However, this topic highlights the fact that SLAs evolve over time and, when doing so, incur changes on the systems under test. Having defined test cases for the SLA metrics that are practically testable will allow you to test that implementations conform to the requirements listed therein before SLA changes are brought into force.

Topic: Roles and responsibilities

Design guidelines

Identify the roles and responsibilities of all parties involved in the service’s delivery.

Define, for all involved parties, their respective points of contact and communication channels.

Define the escalation procedures for addressing issues or concerns, and how efficient decision-making can be achieved.

Conformance testing tips

Such aspects are more human-oriented and cannot be practically tested via the Test Bed.

Topic: Security and data protection

Design guidelines

Ensure data protection by specifying encryption standards, data backup procedures, and data access controls. Include specifications regarding security standards, vulnerabilities, and steps taken to protect digital solutions from breaches or unauthorised access.

Ensure compliance with relevant security regulations and standards.

Describe incident response procedures for detecting, reporting, and responding to security breaches.

Conformance testing tips

Anything related to the setup of the communication channel with the system under test can be easily tested. In fact, using the Test Bed makes it possible to manipulate aspects such as encryption algorithms, deliberately using ones that are expected to be rejected. Moreover, doing this through a custom messaging service triggered from a test case makes it possible to configure the channel per tested scenario, in ways that correctly implemented software normally would not.

Similarly, requirements pertaining to access control can also be tested by including test cases where invalid authorisation attempts are triggered and are expected to be rejected by the system under test.

A set of tests focusing on selected security aspects, like the ones mentioned above, could be defined in what the Test Bed terms a shared test suite. Such test suites can be linked to multiple business-oriented conformance statements as a set of additional tests to be completed at least once before claiming conformance.
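Manipulating the channel per scenario through a custom messaging service could look like the Python sketch below, which picks a TLS client configuration based on a scenario flag passed from the test case. The `tlsScenario`, `host` and `port` input names and the scenario values are hypothetical. One practical caveat: the local OpenSSL security policy may itself refuse to offer obsolete protocols, so a real service should distinguish such a local failure from a genuine rejection by the system under test before reporting success.

```python
import socket
import ssl
import warnings


def build_client_context(scenario: str) -> ssl.SSLContext:
    """TLS client configuration a (hypothetical) custom messaging service would use,
    selected by a scenario flag passed from the test case."""
    context = ssl.create_default_context()  # certificate checks against trusted CAs stay on
    if scenario == "valid":
        context.minimum_version = ssl.TLSVersion.TLSv1_2
    elif scenario == "obsolete_protocol":
        # Deliberately offer only TLS 1.0/1.1, which a conforming system must refuse.
        # Python deprecates these enum members, so silence the expected warning.
        with warnings.catch_warnings():
            warnings.simplefilter("ignore", DeprecationWarning)
            context.minimum_version = ssl.TLSVersion.TLSv1
            context.maximum_version = ssl.TLSVersion.TLSv1_1
    else:
        raise ValueError(f"unknown TLS scenario: {scenario}")
    return context


def handshake_accepted(host: str, port: int, context: ssl.SSLContext, timeout: float = 5.0) -> bool:
    """Attempt a TLS handshake; connection errors other than TLS failures propagate."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as raw:
            with context.wrap_socket(raw, server_hostname=host):
                return True
    except ssl.SSLError:
        return False


def handle_send(inputs: dict) -> dict:
    """Hypothetical messaging service entry point; the input names are assumptions."""
    scenario = inputs.get("tlsScenario", "valid")
    context = build_client_context(scenario)
    accepted = handshake_accepted(inputs["host"], int(inputs["port"]), context)
    # For the invalid scenario, the test passes only if the handshake was refused.
    passed = accepted if scenario == "valid" else not accepted
    return {"scenario": scenario, "handshakeAccepted": accepted,
            "result": "SUCCESS" if passed else "FAILURE"}
```

Keeping the scenario selection inside the service means the same test suite can reuse one send step definition, varying only the flag per test case.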

Topic: Service availability

Design guidelines

Define uptime guarantees as the percentage of time the service is expected to be available.

Define clear periods for scheduled maintenance when the service may be unavailable to support maintenance work. Ensure advance notice for users about the scheduled maintenance windows.

Define how unplanned outages are managed, and how users are informed about the status and resolution of issues.

Conformance testing tips

Guarantees on uptime and planned or unplanned downtime are not practically testable through the Test Bed. Such measures are typically expressed as percentages over a period of time, and would require constant monitoring of the system under test over an extended period.
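To make this concrete, the short Python calculation below converts an uptime percentage into the downtime it permits over a period. The 30-day period and the target values are illustrative assumptions, not figures from any particular SLA.

```python
def allowed_downtime_minutes(uptime_percent: float, period_days: float) -> float:
    """Maximum downtime permitted by an uptime guarantee over the given period."""
    period_minutes = period_days * 24 * 60
    return period_minutes * (1 - uptime_percent / 100)


for target in (99.0, 99.9, 99.99):
    print(f"{target}% over 30 days -> {allowed_downtime_minutes(target, 30):.1f} min")
```

At 99.9%, a 30-day month allows only about 43 minutes of downtime in total, so whether the guarantee holds can only be judged by observing the system across the whole period, well beyond the scope of a test session.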

Technical interoperability agreements

Technical interoperability agreements formalise the technical specifications for organisations, to ensure consistent operations under varying technical frameworks.

Topic: Joint processes improvement

Design guidelines

Ensure all parties agree to adhere to governance frameworks serving as tools to enhance processes, ensuring efficiency, effectiveness, and alignment with industry standards and best practices.

Ensure commitment to a continuous evaluation and enhancement of processes, including periodic reviews to assess the effectiveness of adopted frameworks and their adaptation for ongoing alignment with organisational goals and industry best practices.

Conformance testing tips

Such governance and process-oriented points cannot be practically tested via the Test Bed.

Topic: Security and trust

Design guidelines

Ensure all parties agree to comply with established security principles and best practices for secure data transmission, irrespective of specific security policies.

Enable trust verification by securing communications using certificates issued by trusted Certificate Authorities (CAs).

Under no circumstances tolerate the use of self-signed certificates or certificates issued by untrusted CAs.

The configuration of Transport Layer Security (TLS) should be kept up to date with the latest encryption and security standards, and never be downgraded to accommodate parties’ technical deficiencies.

Continuously monitor and evaluate all components to ensure ongoing compliance with established security principles.

Conformance testing tips

Testing certificate trust and correct TLS configuration is perfectly achievable through the Test Bed. This can be done through test cases that initiate communications deliberately using self-signed certificates or obsolete encryption protocols, expecting the communication to be rejected by the system under test.

Similarly, further test cases can use valid certificates and focus on trust issues in exchanged payloads. A typical test would be to have a test case produce a valid message and sign it with a trusted certificate, but then alter the message before it is sent. The test case would then include verify steps as assertions to make sure that the system under test detected the manipulation and responded with the expected error message.

The simplest way to achieve such tests would be through a custom messaging service that will use an invalid configuration when requested to do so by the calling test case. This could be signalled to the service by means of a special input flag of the relevant send or receive step.
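The payload-manipulation test described above can be sketched in Python as follows. One substitution is deliberate: the standard library has no certificate-based signing, so an HMAC with a shared test key stands in for the digital signature. `receiver_accepts` is a hypothetical model of the check a conforming system under test would perform; in an actual test case, the final assertion would instead be a verify step on the error response returned by the system.

```python
import hashlib
import hmac
import json

# Assumption: an HMAC over a shared test key stands in for a certificate-based signature.
TEST_KEY = b"shared-test-key"


def canonical(body: dict) -> bytes:
    """Serialise the message deterministically so sender and receiver sign the same bytes."""
    return json.dumps(body, sort_keys=True).encode()


def sign(body: dict) -> dict:
    return {"body": body, "signature": hmac.new(TEST_KEY, canonical(body), hashlib.sha256).hexdigest()}


def receiver_accepts(envelope: dict) -> bool:
    """Hypothetical integrity check performed by a conforming system under test."""
    expected = hmac.new(TEST_KEY, canonical(envelope["body"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, envelope["signature"])


# Test case: produce a valid signed message, then tamper with it before sending.
envelope = sign({"invoiceId": "INV-1", "amount": "100.00"})
assert receiver_accepts(envelope)  # the untouched message must be accepted

tampered = {"body": {**envelope["body"], "amount": "999.00"}, "signature": envelope["signature"]}
assert not receiver_accepts(tampered)  # the manipulated message must be rejected
```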

Topic: Technical collaboration

Design guidelines

Use agreements to establish collaborative frameworks between organisations to facilitate the exchange of knowledge, expertise, and resources.

Align priorities and coordination activities to prevent duplication of effort and maximise the impact of standardisation initiatives.

Include measures to enhance the traceability of adopted standards, ensuring alignment with international best practices and regulatory requirements.

Conformance testing tips

Such points pertaining to collaborative agreements cannot be practically tested via the Test Bed.

Summary

Congratulations! Having completed this guide, you should now have a better awareness of governance aspects, and of how to design the involved agreements to enable interoperability. Besides this, you should now also be aware that such agreements can be a good source of conformance test cases, and that using them will extend your testing coverage beyond your project’s business processes alone. Finally, the guide highlighted typical points you can practically test for, and initial ideas on how this can be achieved.

See also

If you are interested in more details on the background and foundation of this guide you can:

Check the EIRA© for more information and other EIRA© solutions not discussed here.

Check the CAMSS for more details on its assessments and related solutions.

Explore for yourself the standards and specifications included in the ELIS.

Regarding conformance testing, if you are unfamiliar with the Test Bed you can start from its Joinup space for a first introduction. You can then follow up with the Test Bed’s value proposition, which presents its key services and use cases.

For more technical information on conformance testing your starting point should be the Test Bed’s developer onboarding guide. From here you can then check the more detailed documentation that was also referenced frequently during this guide:

The GITB Test Description Language (TDL) documentation on creating test cases.

The GITB test services documentation on creating custom test service extensions.

At several points this guide also mentioned validators, components that can be used as standalone services and as test case validation steps. If you would like to know more and see how to create such validators you can check:

The XML validation guide, for XML validators using XML Schema and Schematron.

The RDF validation guide, for RDF validators using SHACL shapes.

The JSON validation guide, for JSON validators using JSON Schema.

The CSV validation guide, for CSV validators using Table Schema.